Big data security

The security and protection of sensitive data is a major concern of data management today. To help users protect their data, Pentaho provides two methods of implementing security for the Pentaho Server: Kerberos authentication and secure impersonation. Both methods are secure and require users to provide proof of identity to connect to a cluster. If you are using a Knox security gateway along with Kerberos authentication for your Cloudera Data Platform (CDP) cluster, also see Pentaho and CDP security.

Kerberos authentication versus secure impersonation

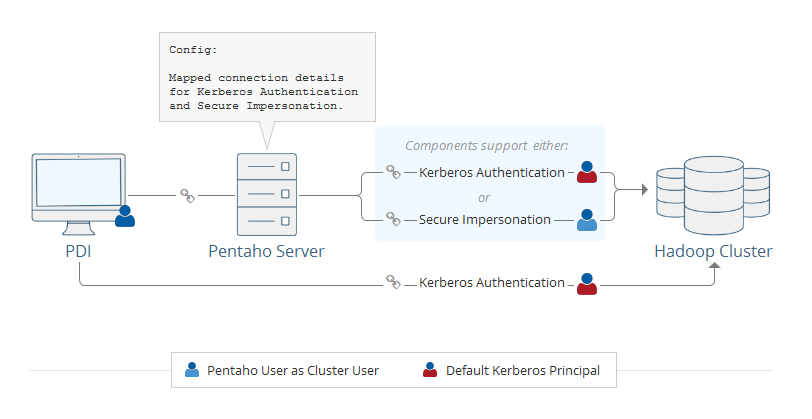

Kerberos authentication occurs when Pentaho users connect to a cluster with a default Kerberos principal. All Pentaho users connect to the cluster with the same Kerberos principal.

In secure impersonation, users connect to the cluster as a proxy user. Each Pentaho user ID maps one-to-one to a cluster user ID, and resources are accessed and processes are executed as that cluster user.
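The difference between the two methods comes down to which identity the cluster sees. The following minimal sketch models that choice; the `AuthMode` enum, the `effective_cluster_user` function, and the principal name `pentaho@EXAMPLE.COM` are hypothetical illustrations, not part of any Pentaho API.

```python
from enum import Enum


class AuthMode(Enum):
    # Hypothetical labels for the two methods described above.
    KERBEROS = "kerberos"                          # one shared default principal
    SECURE_IMPERSONATION = "secure_impersonation"  # proxy user per Pentaho user


def effective_cluster_user(pentaho_user: str, mode: AuthMode, default_principal: str) -> str:
    """Return the identity under which cluster resources are accessed.

    Kerberos authentication: every Pentaho user shares the default principal.
    Secure impersonation: the Pentaho user ID maps one-to-one to the
    cluster user ID, so work runs as that cluster user.
    """
    if mode is AuthMode.KERBEROS:
        return default_principal
    return pentaho_user


if __name__ == "__main__":
    principal = "pentaho@EXAMPLE.COM"  # hypothetical default principal
    print(effective_cluster_user("alice", AuthMode.KERBEROS, principal))
    print(effective_cluster_user("alice", AuthMode.SECURE_IMPERSONATION, principal))
```

With Kerberos authentication, "alice" and every other Pentaho user appear to the cluster as the same principal; with secure impersonation, the cluster sees "alice".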

Secure impersonation occurs if the following criteria are met:

- Predefined credentials can authenticate to a Kerberos server before connecting to the cluster.

- The Pentaho Server is configured for secure impersonation.

- The cluster is secured.

Prerequisites

For secure impersonation to work, the following conditions must also be met:

- The cluster must be configured with Kerberos security.

- The cluster must have a Kerberos principal that represents Pentaho, can authenticate to the cluster services, and has privileges to impersonate other users.

- Job or transformation requests must be sent to the cluster from the Pentaho Server.

- The cluster components must support secure impersonation.
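The criteria and prerequisites above can be read as a checklist in which every item must hold. The sketch below expresses that checklist as a data structure; the `ImpersonationEnvironment` class and its field names are hypothetical, and each field stands in for a condition listed above rather than for any real Pentaho setting.

```python
from __future__ import annotations

from dataclasses import dataclass, fields


@dataclass
class ImpersonationEnvironment:
    # Each field mirrors one condition from the lists above (hypothetical names).
    kdc_login_succeeds: bool                  # predefined credentials authenticate to Kerberos
    server_configured_for_impersonation: bool
    cluster_secured_with_kerberos: bool
    pentaho_principal_can_impersonate: bool   # principal authenticates and may impersonate
    request_from_pentaho_server: bool
    component_supports_impersonation: bool


def unmet_conditions(env: ImpersonationEnvironment) -> list[str]:
    """Return the names of the conditions that are not satisfied.

    An empty list means secure impersonation can be used.
    """
    return [f.name for f in fields(env) if not getattr(env, f.name)]


if __name__ == "__main__":
    env = ImpersonationEnvironment(
        kdc_login_succeeds=True,
        server_configured_for_impersonation=True,
        cluster_secured_with_kerberos=True,
        pentaho_principal_can_impersonate=True,
        request_from_pentaho_server=False,  # e.g. run from the PDI client
        component_supports_impersonation=True,
    )
    print(unmet_conditions(env))
```

Reporting the unmet items, rather than a single true/false, makes it easier to see which condition prevents secure impersonation.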

Pentaho support for secure impersonation is determined by whether the Hadoop components support secure impersonation. If the Hadoop component does not support secure impersonation, then Kerberos authentication is used.

Supported components

The following table shows which components currently support secure impersonation.

| Component | Secure Impersonation Support? |
| --- | --- |
| Cloudera-Impala | Yes |
| HBase | Yes |
| HDFS | Yes |
| Hadoop MapReduce | Yes |
| Hive | Yes |
| Oozie | Yes |
| Pentaho MapReduce (PMR) (Note: When running a Pentaho MapReduce job, you can securely connect to Hive and HBase within the mapper, reducer, or combiner.) | Yes |
| Pig Executor | Yes |
| Carte on Yarn | No |
| Impala | No |
| Sqoop | No |
| Spark SQL | No |
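Because a component without secure impersonation support falls back to Kerberos authentication, the support table works as a simple lookup. The sketch below transcribes the table into a dictionary; the `auth_method_for` function is hypothetical, and treating components missing from the table as unsupported is an assumption.

```python
# Secure impersonation support, transcribed from the component table.
SECURE_IMPERSONATION_SUPPORT = {
    "Cloudera-Impala": True,
    "HBase": True,
    "HDFS": True,
    "Hadoop MapReduce": True,
    "Hive": True,
    "Oozie": True,
    "Pentaho MapReduce (PMR)": True,
    "Pig Executor": True,
    "Carte on Yarn": False,
    "Impala": False,
    "Sqoop": False,
    "Spark SQL": False,
}


def auth_method_for(component: str) -> str:
    """Return the method a server-side request to `component` would use.

    Unsupported components fall back to Kerberos authentication.
    Components not listed are treated as unsupported (an assumption).
    """
    if SECURE_IMPERSONATION_SUPPORT.get(component, False):
        return "secure impersonation"
    return "Kerberos authentication"


if __name__ == "__main__":
    for name in ("Hive", "Sqoop"):
        print(name, "->", auth_method_for(name))
```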

Secure impersonation is not supported when running directly from the following:

- PDI client

- Scheduled jobs and transformations

- Pentaho Report Designer

- Pentaho Metadata Editor

- Kitchen

- Pan

- Carte

You cannot use secure impersonation when you run a transformation or job against the cluster from the PDI client. However, if you run a job or transformation that you created in the PDI client from the Pentaho Server, you can use secure impersonation.

How to enable secure impersonation

Here is how to enable secure impersonation and how the Pentaho Server processes the request. The mapping value "simple" in the driver configuration file turns on secure impersonation. This value is set when you specify impersonation settings while creating a named connection.

See Connecting to a Hadoop cluster with the PDI client for instructions on creating a named connection.
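The text does not show the configuration file's syntax or the name of the property that holds the mapping value. As a minimal sketch, assuming a Java-style key=value properties file, reading the value and testing for "simple" could look like the following. The `mapping_type_from_text` and `impersonation_enabled` functions and the key `example.mapping.type` are hypothetical; substitute the property name your configuration file actually uses.

```python
def mapping_type_from_text(text: str, key: str) -> str:
    """Return the value stored under `key` in key=value configuration text.

    Assumes a simplified properties format: one key=value pair per line,
    with lines starting with '#' or '!' treated as comments.
    `key` is supplied by the caller because the real property name is
    not given here. Returns "" if the key is absent.
    """
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        name, sep, value = line.partition("=")
        if sep and name.strip() == key:
            return value.strip()
    return ""


def impersonation_enabled(mapping_type: str) -> bool:
    """Only the mapping value "simple" turns on secure impersonation."""
    return mapping_type.strip() == "simple"


if __name__ == "__main__":
    sample = "# hypothetical file contents\nexample.mapping.type=simple\n"
    value = mapping_type_from_text(sample, "example.mapping.type")
    print(value, impersonation_enabled(value))
```

Returning an empty string for a missing key mirrors the documented behavior that a blank value is treated like "disabled".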

Understanding secure impersonation

When the Pentaho Server starts, it checks the mapping type value in the configuration file. If the value is disabled or blank, the server does not use authentication when connecting to the cluster, so it cannot log on to a secured cluster. If the value is simple, each request is evaluated to determine whether it originated from the PDI client (Spoon) or from the Pentaho Server.

If the request comes from the PDI client, Kerberos authentication is used. If the request comes from the Pentaho Server, the server checks whether the target service component supports secure impersonation. If the component supports it, the request uses secure impersonation; otherwise, the request uses Kerberos authentication.
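The evaluation order described above can be sketched as a single decision function. This is an illustration of the documented logic, not Pentaho's implementation: the function name `choose_auth`, the origin labels "pdi_client" and "server", and the return strings are hypothetical, and rejecting mapping values other than simple, disabled, or blank is an assumption because the text does not describe them.

```python
def choose_auth(mapping_type: str, origin: str, component_supports_impersonation: bool) -> str:
    """Sketch of how a request's authentication method is chosen.

    mapping_type: value from the configuration file ("simple", "disabled", or "").
    origin: "pdi_client" or "server" (hypothetical labels for the request source).
    Returns "none", "kerberos", or "secure_impersonation".
    """
    value = mapping_type.strip()
    if value in ("", "disabled"):
        # No authentication is used, so a secured cluster cannot be reached.
        return "none"
    if value != "simple":
        # Other values are not described; rejecting them is an assumption.
        raise ValueError(f"unrecognized mapping type: {mapping_type!r}")
    if origin == "pdi_client":
        # Requests from the PDI client always use Kerberos authentication.
        return "kerberos"
    if origin != "server":
        raise ValueError(f"unknown request origin: {origin!r}")
    if component_supports_impersonation:
        return "secure_impersonation"
    # Server requests to unsupported components fall back to Kerberos.
    return "kerberos"


if __name__ == "__main__":
    print(choose_auth("simple", "server", True))
    print(choose_auth("simple", "pdi_client", True))
```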

When impersonation is successful, the Pentaho Server log will report

Use secure impersonation with a cluster

Whether secure impersonation is established depends on the options you select when you create or edit a named connection to a cluster in the PDI client. If your environment requires advanced settings, your server runs on Windows, or you are using a Cloudera Impala database, see Manual and advanced secure impersonation configuration.

How to enable Kerberos authentication

The following articles explain how to use Kerberos with different components.

Set up Kerberos for Pentaho

Set up Kerberos for Pentaho to connect to a secure Big Data cluster.

Use Kerberos with MongoDB

Configure Kerberos authentication to access MongoDB.

Pentaho and CDP security

The following systems are supported for Kerberos and Knox when using Pentaho with a secured CDP cluster:

| System | Kerberos | Knox |
| --- | --- | --- |
| HDFS | Supported | Supported |
| Avro | Supported | Supported |
| OFC | Supported | Not supported |
| Parquet | Supported | Not supported |
| YARN | Supported | Not supported |
| YARN-based Pentaho MapReduce (PMR) | Supported* | Not supported |
| Pig | Supported | Not supported |
| Sqoop | Supported | Not supported |
| Hive | Supported | Supported |
| YARN-based HBase | Supported | Supported |
| YARN-based Hadoop job executor | Supported | Not supported |
| Oozie | Not supported | Supported |
| Impala | Supported | Supported |
| Spark submit | Supported | Not supported |

*You must have enough permission to write to the /opt directory in the HDFS root directory to run Pentaho MapReduce in the CDP Public Cloud with Kerberos.
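When planning a CDP deployment that combines Kerberos and Knox, it can help to filter the support matrix for systems that work with every mechanism you need. The sketch below transcribes the CDP table into a dictionary; the `CDP_SUPPORT` name and the `systems_supporting` function are hypothetical helpers, and the PMR permission footnote is recorded only as a comment.

```python
# (Kerberos supported, Knox supported), transcribed from the CDP table.
CDP_SUPPORT = {
    "HDFS": (True, True),
    "Avro": (True, True),
    "OFC": (True, False),
    "Parquet": (True, False),
    "YARN": (True, False),
    # Kerberos support requires write permission to /opt in the HDFS root
    # directory when running in the CDP Public Cloud.
    "YARN-based Pentaho MapReduce (PMR)": (True, False),
    "Pig": (True, False),
    "Sqoop": (True, False),
    "Hive": (True, True),
    "YARN-based HBase": (True, True),
    "YARN-based Hadoop job executor": (True, False),
    "Oozie": (False, True),
    "Impala": (True, True),
    "Spark submit": (True, False),
}


def systems_supporting(kerberos: bool, knox: bool) -> list:
    """Return systems that support every mechanism requested.

    Passing False for a mechanism means it is not required.
    """
    return [
        name
        for name, (krb_ok, knox_ok) in CDP_SUPPORT.items()
        if (krb_ok or not kerberos) and (knox_ok or not knox)
    ]


if __name__ == "__main__":
    print(systems_supporting(kerberos=True, knox=True))
```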

Use of Knox as a gateway for CDP and Pentaho

If you are also using Knox as a gateway security tool, see Use Knox to access CDP for details.