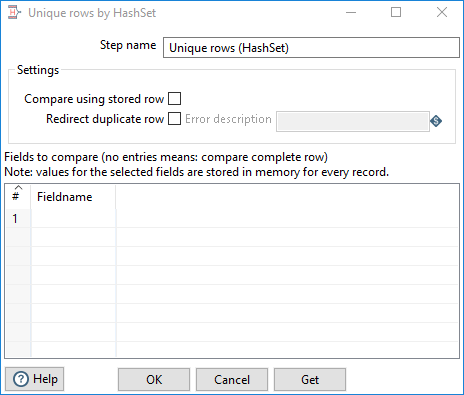

Unique Rows (HashSet)

The Unique Rows (HashSet) step removes duplicate rows from the input stream and passes only the unique rows to its output.

This step differs from the Unique Rows transformation step in that it keeps track of duplicate rows in memory, so it does not require sorted input to process duplicate rows.
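As a rough illustration of why sorted input is unnecessary, the following sketch removes duplicates with an in-memory set. It is not the step's actual implementation: the function name `unique_rows_hashset`, the dictionary-based row format, and the sample data are all assumptions made for this example.

```python
def unique_rows_hashset(rows, fields):
    """Yield the first occurrence of each distinct key, in input order.

    Hypothetical helper: rows are dicts, fields are the names to compare.
    """
    seen = set()  # grows with the number of distinct keys, not the row count
    for row in rows:
        key = tuple(row[f] for f in fields)
        if key not in seen:
            seen.add(key)
            yield row


# Duplicates are not adjacent, yet they are still detected.
rows = [
    {"id": 2, "city": "Oslo"},
    {"id": 1, "city": "Lima"},
    {"id": 2, "city": "Oslo"},
    {"id": 1, "city": "Lima"},
]
unique = list(unique_rows_hashset(rows, ["id", "city"]))
```

Because every distinct key stays in the set until processing ends, memory use grows with the number of unique rows, which is consistent with the memory note for this step.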

Note: Because of memory allocation issues, this step is intended for non-client machines. The required amount of memory and hardware will vary based on the size of the data you are processing.

General

The Unique Rows (HashSet) step requires you to define parameters for your output and how you want to sort and track duplicate rows.

Enter the following information for the Step name field:

- Step name: Specify the unique name of the transformation on the canvas. You can customize the name or leave it as the default.

Settings

The Unique Rows (HashSet) step requires definitions for the following options and parameters:

| Option | Description |
| --- | --- |
| Compare using stored row values | Select this option to store values for the selected fields in memory for every record. Storing row values requires more memory, but it prevents possible false positives if there are hash collisions. |
| Redirect duplicate row | Select this option to process duplicate rows as an error and redirect them to the error stream of the step. If you do not select this option, the duplicate rows are deleted. |
| Error description | Specify the error handling description that displays when the step detects duplicate rows. This description is only available when Redirect duplicate row is selected. |
| Fields to compare table | Specify the field names for which you want to find unique values, or select Get to insert all the fields from the input stream. |
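The trade-off behind the options above can be sketched in a few lines. This is an illustrative model only, not the step's real code: `dedupe`, its parameters, and the deliberately weak `weak_hash` function are hypothetical and exist only to force a hash collision. With hash-only comparison, two different rows that share a hash are treated as duplicates (a false positive); storing the row values lets the comparison fall back to the actual values.

```python
def dedupe(rows, fields, store_values=False, redirect_duplicates=False,
           error_description="Duplicate row", hash_fn=hash):
    """Return (unique_rows, error_rows). Hypothetical model of the options."""
    seen = {}  # hash -> stored keys (list stays empty in hash-only mode)
    unique, errors = [], []
    for row in rows:
        key = tuple(row[f] for f in fields)
        h = hash_fn(key)
        if h not in seen:
            seen[h] = [key] if store_values else []
            unique.append(row)
        elif store_values and key not in seen[h]:
            # Same hash, different values: a collision, not a duplicate.
            seen[h].append(key)
            unique.append(row)
        elif redirect_duplicates:
            # Send the duplicate to the error stream with a description.
            errors.append((row, error_description))
        # Otherwise the duplicate is silently dropped.
    return unique, errors


def weak_hash(key):
    """Assumed toy hash: collides for any names of equal length."""
    return len(str(key[0]))


rows = [{"name": "ann"}, {"name": "bob"}, {"name": "ann"}]

hash_only, _ = dedupe(rows, ["name"], hash_fn=weak_hash)
stored, errs = dedupe(rows, ["name"], store_values=True,
                      redirect_duplicates=True, hash_fn=weak_hash)
```

In this toy run, the hash-only call wrongly discards the `bob` row because it collides with `ann`, while the stored-values call keeps both and redirects only the true duplicate. Real hash functions collide far less often, so the extra memory buys protection against a rare but silent loss of data.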