Data lineage

Pentaho offers you the ability to visualize the end-to-end flow of your data across PDI transformations and jobs, providing you with valuable insights to help you maintain meaningful data. This ability to track your data from source systems to target applications allows you take advantage of third-party tools, such as Meta Integration Technology (MITI) and yEd, to track and view specific data.

Once lineage tracking is enabled, PDI will generate a GraphML file every time you run a transformation. You can then open this file using a third-party tool, such as yEd, to view a tree diagram of the data. By parsing through and teasing out the different parts of the graph, you can gain an end-to-end view into a specific element of data from origin to target. This ability can aid you in both data lineage and impact analysis:

- Data lineage provides the ability to discover the origins of an element of data and describes the sequence of jobs and transformations which have occurred up to the point of the request for the lineage information.

- Impact analysis is the reverse flow of information which can be used to trace the use and consumption of a data item, typically for the purpose of managing change or assessing and auditing access.

Sample use cases

Data lineage and impact analysis can be applicable in several ways.

As an ETL Developer:

- There are changes in my source system, such as fields which are added, deleted and renamed. What parts of my ETL processes need to adapt? (Impact Analysis)

- I need additional information in my target system, such as for reports. What sources are can provide this additional information? (Data Lineage)

As a Data Steward:

- There is a need for auditability and transparency to determine where data is coming from. A global, company-wide, metadata repository needs data lineage information from different systems and applications, i.e. very fine-grained metadata.

- What elements (fields, tables, etc.) in my ETL processes are never used? How many times is a specific element used in some or all of my ETL processes?

As a Report/Business User:

- Is my data accurate?

- I want to find reports which include specific information from a source, such as a field. This process is "data discovery." For example, are there any data sources which include sales and gender? Are there any reports which include sales and zip codes?

As a Troubleshooting Operator:

- The numbers in the report are wrong (or supposed to be wrong). What processes (transformations, jobs) are involved to help me determine where these numbers are coming from?

- A job or transformation did not finish successfully. What target tables and fields are affected which are used in the reports?

As an Administrator:

- For documentation and auditing purposes, I want to have a report on external sources and target fields, tables, and databases of my ETL processes. I need the data for a specific date and version.

- To ensure compliance, I want to validate naming conventions of artifacts (fields, tables, etc.)

- For integration into third-party data lineage tools, I want a flexible way of exporting the collected data lineage information.

Architecture

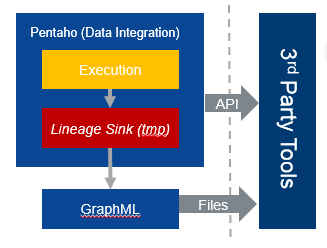

Pentaho's data lineage capabilities allow you to take advantage of tools from Metadata Integration Technology (MITI). If you use a lot of different systems and applications, you can track and visualize specific data across these systems using Pentaho lineage capabilities and third-party tools such as MITI and yEd.

Setup

Modify …\system\karaf\etc\pentaho.metaverse.cfg (Client & Pentaho Server when needed):

- You need to enable lineage explicitly by setting lineage.execution.runtime = on

- Modify the default folder for lineage GraphML files accordingly: lineage.execution.output.folder=./pentaho-lineage-output

- Set lineage.execution.generation.strategy = latest (by default)

After the execution of a job or transformation, the GraphML files are generated in the defined folder.

API

It is also possible to access the GraphML information via a Pentaho Server API. There are REST endpoints available to retrieve the lineage related artifacts.

Below are some example curl commands which exercise the REST endpoints available on the Pentaho Server. These calls use basic authentication. For more information on the various ways to authenticate with the Pentaho Server, see Authenticate with the server before making service calls.

For more detailed information about the REST endpoints available, you can go to the Pentaho Wiki to view the attached Enunciate file.

Get all lineage related artifacts

curl --header "Authorization: Basic YWRtaW46cGFzc3dvcmQ=" http://localhost:8080/pentaho/osgi/cxf/lineage/api/download/allGet all lineage from a given date forward

curl --header "Authorization: Basic YWRtaW46cGFzc3dvcmQ=" http://localhost:8080/pentaho/osgi/cxf/lineage/api/download/all/20150706Get all lineage between 2 dates

curl --header "Authorization: Basic YWRtaW46cGFzc3dvcmQ=" http://localhost:8080/pentaho/osgi/cxf/lineage/api/download/all/20150101/20150706Get all of the lineage artifacts for a specific file in the DI repo

curl --request POST --header "Content-Type: application/json" --header "Authorization: Basic YWRtaW46cGFzc3dvcmQ=" --data '{"path": "/LOCAL DI REPO/home/admin/dataGrid-dummy"}' http://localhost:8080/pentaho/osgi/cxf/lineage/api/download/fileGet all lineage related artifacts for a specific file in the DI repo between 2 dates

curl --request POST --header "Content-Type: application/json" --header "Authorization: Basic YWRtaW46cGFzc3dvcmQ=" --data '{"path": "/LOCAL DI REPO/home/admin/dataGrid-dummy"}' http://localhost:8080/pentaho/osgi/cxf/lineage/api/download/file/20150701/20150707Invalid date request

curl --header "Authorization: Basic YWRtaW46cGFzc3dvcmQ=" http://localhost:8080/pentaho/osgi/cxf/lineage/api/download/all/20159999

Steps and entries with custom data lineage analyzers

The following table lists the PDI steps and job entries with custom analyzers that can generate detailed data lineage information about their function.

| Step / Entry | Version Introduced | Data Lineage Description |

| AMQP Consumer | 8.3 | Creates a resource node for the AMQP server and connects values from AMQP to the child transformation. |

| AMQP Producer | 8.3 | Creates a resource node for the AMQP server and connects outgoing values from the step. |

| Calculator | 6.0 | Adds an operations property to the derived field describing the calculation and displays field nodes. |

| Copy Rows to Result | 6.0 | Uses the default custom step analyzer. |

| CSV File Input | 6.0 | Adds a file resource node displaying the input file path. |

| ETL metadata injection | 8.2 | Adds step property nodes for fields being injected, transformation nodes for injection target step, step node properties for field mappings, as well as nodes and properties representing the related child transformation. |

| Filter Rows | 6.0 | Adds the filter condition to the operations property on the step node and displays fields used in the filter. |

| Fixed File Input | 6.0 | Adds the file resource node displaying the input file path. |

| Get Data from XML | 6.0 | Adds file resource nodes for XML input sources. Adds field metadata to field nodes. |

| Group By | 6.0 | Adds grouping information to the operations field of the output group node along with the input/output field nodes. |

| Hadoop File Input | 8.2 | Adds step related metadata to the root node as properties. Adds resource nodes for the cluster. |

| Hadoop File Output | 8.2 | Adds step related metadata to the root node as properties. Adds resource node for the cluster. |

| HTTP client | 6.0 | Adds field nodes for URLs, parameters, and headers. |

| HTTP Post | 6.0 | Adds field nodes for URLs, parameters, and headers. |

| Job Executor | 6.0 | Sets the job path on the job executor node, adds a link to the job node, and tracks the output fields. |

| JMS Consumer | 8.2 | Adds nodes and step properties for the JMS server, destination, queue, message field, as well as nodes and properties representing the related child transformation. |

| JMS Producer | 8.2 | Adds nodes for the JMS server, destination, queue, message field, as well as properties to the step node. |

| JSON Input | 8.1 | Adds the file resource node for the source JSON file, along with metadata on the root node as properties. |

| JSON Output | 8.1 | Adds the file resource node for the source JSON file, along with metadata on the root node as properties. |

| Kafka Consumer | 8.3 | Creates a resource node for the Kafka server and connects values from Kafka to the child transformation. |

| Kafka Producer | 8.3 | Creates a resource node for the Kafka server and connects outgoing values from the step. |

| Kinesis Consumer | 8.3 | Creates a resource node for Kinesis and connects values from Kinesis to the child transformation. |

| Kinesis Producer | 8.3 | Creates a resource node for Kinesis and connects outgoing values from the step. |

| Mapping | 8.2 | Adds information about inputs and outputs and their mappings, source and target step names to the step node properties, as well as nodes and properties representing the related child transformation. |

| Merge Join | 6.0 | Sets the step properties (for example, the step keys or the join type) on the JSON input node and adds a link between the fields being merged in the source steps. Notates collision-based renames on the field nodes. |

| Microsoft Excel Input | 6.0 | Adds the file resource node displaying the input file path. |

| Microsoft Excel Output | 6.0 | Adds the file resource node displaying the output file path. |

| MongoDB Input | 6.0 | Sets node properties for the output JSON, collection, the query and its characteristics, tag, and preference information. Sets properties on the field nodes related to the JSON path and its location in the source data. |

| MQTT Consumer | 8.3 | Creates a resource node for the MQTT server and connects values from MQTT to the child transformation. |

| MQTT Producer | 8.3 | Creates a resource node for the MQTT server and connects outgoing values from the step. |

| Number Range | 6.0 | Populates the operations field on the node with the specified range rules. |

| Replace in String | 6.0 | Adds the details of the string replacement that was performed on a field to the field node's operations property. |

| REST Client | 6.0 | Adds field nodes for URL, method, body, parameters, and headers. Adds resource inputs for the endpoint metadata coming from input step rows. |

| Select Values | 6.0 | Tracks fields being altered by the step and adds the list of changes to the operations field of the node. |

| Simple Mapping (sub-transformation) | 8.2 | Adds information about inputs and outputs and their mappings to the step node properties, as well as nodes and properties representing the related child transformation. |

| Single Threader | 8.2 | Includes information about injector step, retrieval step, and sub-transformation in the step node properties, as well as nodes and properties representing the related child transformation. |

| Split Fields | 6.0 | Adds the delimiter and enclosure as node properties. Displays metadata in the derived field operation property field that denotes the token index position. |

| Stream Lookup | 6.0 | Adds links from the input lookup nodes that will be used by the stream lookup step. Assigns incoming field nodes to the originating steps. |

| String Operations | 6.0 | Adds the string operations performed on a field to the field node's operations property. |

| Strings Cut | 6.0 | Adds the details of the string cut performed on a field to the field node's operations property. |

| Table Input | 6.0 | Creates a node for the database table and a resource node for the database connection. |

| Table Output | 6.0 | Creates a node for the database output table and a resource node for the database connection. |

| Text File Input | 6.0 | Adds the resource node for the input file. |

| Text File Output | 6.0 | Adds the resource node for the output file. |

| Transformation Executor | 6.0 | Adds a node for the child sub-transformation, and links it to the step node. Links the result fields from the sub-transformation node to the fields of the output node and the input fields to the sub-transformation field nodes. Links the fields it generates to the field created by the step if the sub-transformation has any RowToResult steps. |

| Value Mapper | 6.0 | Adds mapping data onto the step node in the operations property |

| XML Output | 6.0 | Adds a resource node for the XML output file. Adds field output nodes linked from the step to the resource node. |

Advanced topic

Understand the capabilities of metadata lineage for the Pentaho universe.