Virtual file system browser

This section describes the use of the virtual file system (VFS) browser for transformation steps and job entries.

Using the virtual file system browser in PDI

Perform the following steps to access your files with the VFS browser:

Procedure

Select in the PDI client to open the VFS browser.

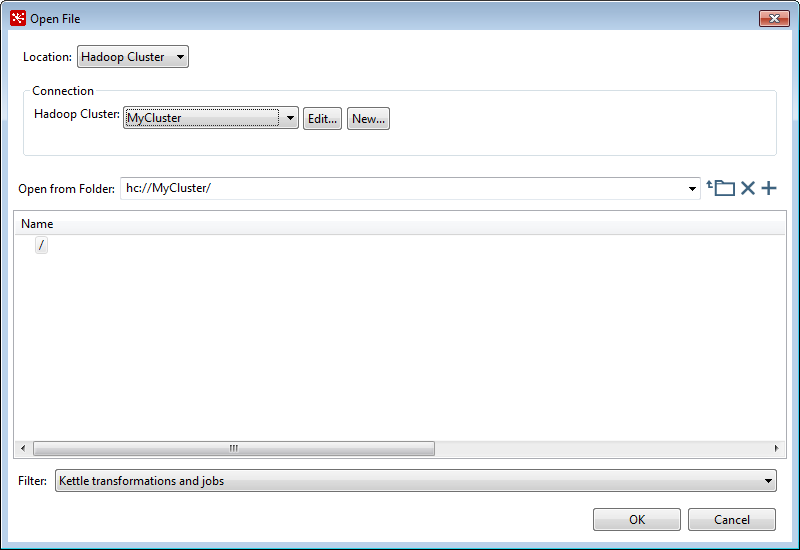

The Open File dialog box opens.

In the Location field, select the type of file system. The following file systems are supported.

- Local: Opens files on your local machine. Use the folders in the Name panel of the Open File dialog box to select a resource.

- Hadoop Cluster: Opens files on any Hadoop cluster except S3. Click the Hadoop Cluster drop-down box to select your cluster, then the resource you want to access.

- S3: Simple Storage Service (S3) accesses the resources on Amazon Web Services. For instructions on setting up AWS credentials, see

- HDFS: Opens files on any Hadoop distributed file system except MapR. Select your desired cluster for the Hadoop Cluster option, then select the resource you want to access.

- MapRFS: Opens files on the MapR file system. Use the folders in the Name panel of the Open File dialog box to select a MapR resource.

- Google Cloud Storage: Opens files on the Google Cloud Storage file system.

- Google Drive: Opens files on the Google file system. You must configure PDI to access the Google file system. See Access to a Google Drive for more information.

- HCP: Opens files on the Hitachi Content Platform. You must configure HCP and PDI before accessing the platform. See Access to HCP for more information.

- Snowflake Staging: Opens files from a staging area used by Snowflake to load files.

In the Open from Folder field, enter the VFS URL.

The following addresses are VFS URL examples for the Open from Folder field:

- Local: ftp://userID:password@ftp.myhost.com/path_to/file.txt

- S3: s3n://s3n/(s3_bucket_name)/(absolute_path_to_file)

- HDFS: hdfs://myusername:mypassword@mynamenode:port/path
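The URL examples above follow the familiar scheme://user:password@host/path shape. As a rough illustration only, the following Python sketch splits such a URL into its parts and masks an embedded password before the URL is logged or shared. It uses only the Python standard library; the function names describe_vfs_url and mask_password are hypothetical and are not part of PDI.

```python
from urllib.parse import urlsplit, urlunsplit


def describe_vfs_url(url):
    """Split a VFS-style URL into scheme, user, host, and path."""
    parts = urlsplit(url)
    return {
        "scheme": parts.scheme,
        "user": parts.username,
        "host": parts.hostname,
        "path": parts.path,
    }


def mask_password(url, mask="***"):
    """Return the URL with any embedded password replaced by a mask."""
    parts = urlsplit(url)
    if parts.password is None:
        return url  # nothing to hide
    # Rebuild the netloc from the raw text so host and port stay untouched.
    userinfo, _, hostport = parts.netloc.rpartition("@")
    user = userinfo.split(":", 1)[0]
    netloc = f"{user}:{mask}@{hostport}"
    return urlunsplit((parts.scheme, netloc, parts.path, parts.query, parts.fragment))


if __name__ == "__main__":
    example = "ftp://userID:password@ftp.myhost.com/path_to/file.txt"
    print(describe_vfs_url(example))
    print(mask_password(example))
```

Masking is worth considering because a VFS URL that embeds credentials exposes them wherever the URL is displayed or stored.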

Access to a Google Drive

Procedure

Turn on the Google Drive API. Follow the "Step 1" procedure in the article "Build your first Drive app (Java)" located in Google Drive APIs documentation.

The credentials.json file is created.

Rename the credentials.json file to client_secret.json. Copy the renamed file into the data-integration/plugins/pentaho-googledrive-vfs/credentials directory.

Restart PDI. The Google Drive option will not appear as a Location until you copy the client_secret.json file into the credentials directory and restart PDI.

Select Google Drive as your Location in the VFS browser. You are prompted to log in to your Google account.

Enter your Google account credentials and log in. The Google Drive permission screen displays.

Click Allow to access your Google Drive Resources.
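The rename-and-copy step in this procedure can also be scripted. The following Python sketch is one possible way to do it, assuming you pass in the location of the downloaded credentials.json file and the path to your data-integration directory. The function name install_client_secret is hypothetical, and creating the credentials directory when it is missing is an assumption rather than documented behavior.

```python
import shutil
from pathlib import Path

# Relative location named in the procedure above.
CREDENTIALS_SUBDIR = Path("plugins") / "pentaho-googledrive-vfs" / "credentials"


def install_client_secret(credentials_json, data_integration_dir):
    """Copy credentials.json into the plugin directory as client_secret.json."""
    source = Path(credentials_json)
    if not source.is_file():
        raise FileNotFoundError(f"No credentials file at {source}")
    target_dir = Path(data_integration_dir) / CREDENTIALS_SUBDIR
    target_dir.mkdir(parents=True, exist_ok=True)  # assumption: create it if missing
    target = target_dir / "client_secret.json"
    shutil.copyfile(source, target)
    return target
```

PDI still needs to be restarted after the file is in place, as described in the procedure.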


Access to Google Cloud

Perform the following steps to set up your system to use Google Cloud storage:

Procedure

Download the service account credentials file that you have created using the Google API Console to your local machine.

Create a new system environment variable on your operating system named GOOGLE_APPLICATION_CREDENTIALS.

Set the path to the downloaded JSON service account credentials file as the value of the GOOGLE_APPLICATION_CREDENTIALS variable.
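Before starting PDI, it can help to confirm that the variable is visible and points at a usable file. The following Python sketch is a minimal check under stated assumptions: it only verifies that GOOGLE_APPLICATION_CREDENTIALS is set, that the file exists, and that the JSON contains the "type": "service_account" field found in service account key files. The function name check_google_credentials is hypothetical, and the check does not contact Google Cloud.

```python
import json
import os


def check_google_credentials(environ=os.environ):
    """Return the credentials path if the variable points at a service account key."""
    path = environ.get("GOOGLE_APPLICATION_CREDENTIALS")
    if not path:
        raise RuntimeError("GOOGLE_APPLICATION_CREDENTIALS is not set")
    if not os.path.isfile(path):
        raise FileNotFoundError(f"Credentials file not found: {path}")
    with open(path, encoding="utf-8") as handle:
        data = json.load(handle)
    # Service account key files carry a "type" field with this value.
    if data.get("type") != "service_account":
        raise ValueError("File does not look like a service account key")
    return path
```

Run the check from the same shell or account that launches PDI, because environment variables set elsewhere may not be visible to it.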


Access to HCP

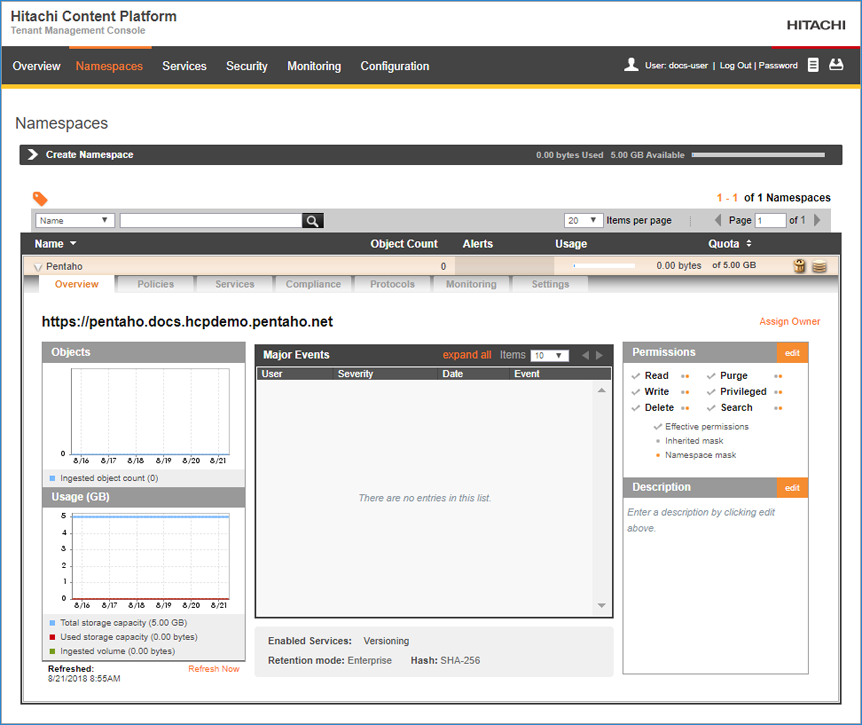

Hitachi Content Platform (HCP) is a distributed storage system that can be used with the VFS browser.

Within HCP, access control lists (ACLs) grant the user privileges to perform a variety of file operations. Namespaces, owned and managed by tenants, are used for logical groupings, access and permissions, as well as object metadata such as retention and shred settings. For more information about HCP, see the Introduction to Hitachi Content Platform.

Set up access to HCP

Procedure

Log on to the HCP Tenant Management Console.

Click Namespaces and then select the Name you want to configure.

In the Protocols tab, click HTTP(S), and verify Enable HTTPS and Enable REST API with Authenticated access only are selected.

In the Settings tab, select ACLs.

Select the Enable ACLs check box and, when prompted, click Enable ACLs to confirm.


Set up HCP credentials

Procedure

Depending on the operating system, create the following subdirectory in the user’s home directory:

- Linux: ~/.hcp/

- Windows: C:\Users\username\.hcp\

Create a file named credentials and save it to the .hcp directory.

Open the credentials file, then add the parameters and values shown below:

    [default]
    hcp_username=[username]
    hcp_password=[password]
    accept_self_signed_certificates=false

Insert the HCP namespace username and password. Change accept_self_signed_certificates to true only if you want to accept self-signed certificates, which acts as a security bypass.

Note: You can also use obfuscated or encrypted usernames and passwords.

Save and close the file.

For the Pentaho Server setup, stop and start the server.
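The credentials file from this procedure can also be generated with a script. The following Python sketch writes a [default] profile with the three parameters named above into a .hcp directory under the user's home directory. The function name write_hcp_credentials and its arguments are hypothetical, and restricting the file permissions is an added precaution rather than a documented requirement.

```python
import configparser
import os
from pathlib import Path


def write_hcp_credentials(username, password, home=None, accept_self_signed=False):
    """Write the [default] profile to <home>/.hcp/credentials and return its path."""
    home_dir = Path(home) if home is not None else Path.home()
    hcp_dir = home_dir / ".hcp"
    hcp_dir.mkdir(exist_ok=True)

    # interpolation=None keeps '%' characters in passwords literal.
    config = configparser.ConfigParser(interpolation=None)
    config["default"] = {
        "hcp_username": username,
        "hcp_password": password,
        "accept_self_signed_certificates": str(accept_self_signed).lower(),
    }

    path = hcp_dir / "credentials"
    with open(path, "w", encoding="utf-8") as handle:
        # Write key=value pairs without spaces around the equals sign.
        config.write(handle, space_around_delimiters=False)
    os.chmod(path, 0o600)  # assumption: restrict access; limited effect on Windows
    return path
```

The sketch stores the values as plain text. If you use obfuscated or encrypted values, pass them in already encoded.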

Results

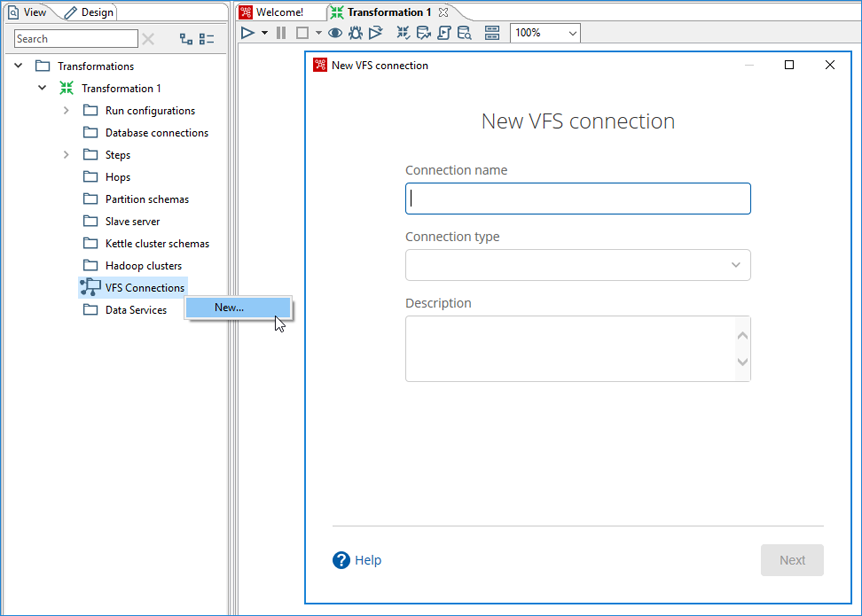

Set up a VFS connection in PDI

After you create a new VFS connection, you can use it with select PDI steps and entries that use the VFS browser. The VFS connection is saved in the repository. If you are not connected to a repository, the connection is saved locally on the machine where it was created.

Perform the following steps to set up a VFS connection in PDI:

Procedure

Start the PDI client (Spoon) and create a new transformation or job.

In the View tab of the Explorer pane, right-click on the VFS Connections folder, and then click New.

The New VFS connection dialog box opens.

In the Connection name field, enter a name that uniquely describes this connection.

The name can contain spaces, but it cannot include special characters, such as #, $, and %.

In the Connection type field, select Snowflake Staging, HCP, or Google Cloud Storage from the drop-down list.

(Optional) Enter a description for your connection in the Description field.

Click Next.

On the Connection Details page, enter the information according to your connection type.

(Optional) Click Test to verify your connection.

Click Next to view the connection summary, then Finish to complete the setup.
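If you generate connection names programmatically, you might pre-check them against the naming rule in this procedure. The following Python sketch is an assumption-heavy illustration: the procedure only names #, $, and % as examples of rejected characters, so the allow-list used here (letters, digits, spaces, underscores, and hyphens) is a conservative guess, not the rule PDI enforces. The function name is_valid_connection_name is hypothetical.

```python
import re

# Assumption: letters, digits, spaces, underscores, and hyphens are allowed.
# The text only names #, $, and % as examples of rejected characters.
_ALLOWED_NAME = re.compile(r"[A-Za-z0-9 _-]+")


def is_valid_connection_name(name):
    """Return True if the name is non-blank and uses only allowed characters."""
    return bool(name.strip()) and _ALLOWED_NAME.fullmatch(name) is not None


if __name__ == "__main__":
    for candidate in ["My HCP connection", "sales#1", "cost$", "50%"]:
        print(candidate, is_valid_connection_name(candidate))
```

An allow-list may reject names that PDI would accept, so treat a failed check as a prompt to verify the name in the New VFS connection dialog box.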


Edit a VFS connection

Procedure

Right-click the VFS Connections folder and select Edit.

When the Edit VFS Connection dialog box opens, select the Pencil icon next to the section you want to edit.

Delete a VFS connection

Procedure

Right-click the VFS Connections folder.

Select Delete, then Yes, Delete.

Results

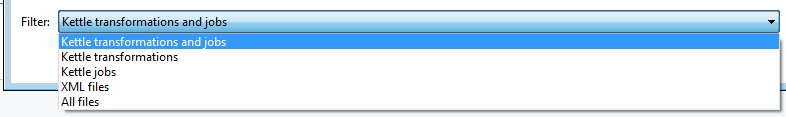

Add and delete folders or files

You can also use the VFS browser to delete files or folders on your file system and to create new folders. A default filter is applied so that initially only Kettle transformation and job files display. To view other files, click the Filter drop-down menu and select the type of file you want to view.

To delete a file or folder, select it, then click the X icon in the upper-right corner of the VFS browser.

To create a new folder, click the Plus Sign icon in the upper-right corner of the VFS browser, enter the new folder name, and click OK.
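To picture what the default filter shows, the following Python sketch keeps only names with the Kettle transformation (.ktr) and job (.kjb) suffixes. It mimics the described behavior and is not how the VFS browser is implemented; the function name default_filter is hypothetical.

```python
from pathlib import PurePosixPath

# Kettle transformations use .ktr and jobs use .kjb.
KETTLE_SUFFIXES = {".ktr", ".kjb"}


def default_filter(names):
    """Keep only names that end in a Kettle transformation or job suffix."""
    return [n for n in names if PurePosixPath(n).suffix.lower() in KETTLE_SUFFIXES]


if __name__ == "__main__":
    listing = ["load.ktr", "nightly.kjb", "notes.txt", "VFS Configuration Sample.ktr"]
    print(default_filter(listing))
```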

Supported steps and entries

Supported transformation steps and job entries open the VFS browser instead of the traditional File Open dialog box. With the VFS browser, you specify a VFS URL instead of a file path to access those resources.

The following steps and entries support the VFS browser:

- Amazon EMR Job Executor (introduced in v.9.0)

- Amazon Hive Job Executor (introduced in v.9.0)

- AMQP Consumer (introduced in v.9.0)

- Avro Input (introduced in v.8.3)

- Avro Output (introduced in v.8.3)

- ETL metadata injection

- File Exists (Job Entry)

- Hadoop Copy Files

- Hadoop File Input

- Hadoop File Output

- JMS Consumer (introduced in v.9.0)

- Job Executor (introduced in v.9.0)

- Kafka Consumer (introduced in v.9.0)

- Kinesis Consumer (introduced in v.9.0)

- Mapping (sub-transformation)

- MQTT Consumer (introduced in v.9.0)

- ORC Input (introduced in v.8.3)

- ORC Output (introduced in v.8.3)

- Parquet Input (introduced in v.8.3)

- Parquet Output (introduced in v.8.3)

- Oozie Job Executor (introduced in v.9.0)

- Simple Mapping (introduced in v.9.0)

- Single Threader (introduced in v.9.0)

- Sqoop Export (introduced in v.9.0)

- Sqoop Import (introduced in v.9.0)

- Transformation Executor (introduced in v.9.0)

- Weka Scoring (introduced in v.9.0)

Configure VFS options

You can configure the VFS browser with variables that are set as parameters for use at runtime. The sample transformation VFS Configuration Sample.ktr, located in the data-integration/samples/transformations directory, contains examples of the parameters you can set. For more information on setting variables, see Specifying VFS properties as parameters. For an example of configuring an SFTP VFS connection, see Configure SFTP VFS.