Work with jobs

In the PDI client (Spoon), you can develop jobs to orchestrate your ETL activities. The entries used in your jobs define the individual ETL elements, such as transformations, applied to your data. The jobs containing your entries are stored in .kjb files. You can access these .kjb files through the PDI client.
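Because a .kjb file is XML, you can also inspect a job outside the PDI client. The following is a minimal sketch, assuming the common layout in which a `job` root element holds an `entries` element with one `entry` child (carrying `name` and `type`) per job entry. The sample document, its entry names, and the `list_entries` helper are hypothetical and exist only for illustration.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple

# Hypothetical, heavily trimmed stand-in for the contents of a .kjb file.
SAMPLE_KJB = """<job>
  <name>nightly_load</name>
  <entries>
    <entry><name>Start</name><type>SPECIAL</type></entry>
    <entry><name>Load customers</name><type>TRANS</type></entry>
    <entry><name>Success</name><type>SUCCESS</type></entry>
  </entries>
</job>"""


def list_entries(kjb_xml: str) -> List[Tuple[str, str]]:
    """Return (name, type) for each entry found under <entries>."""
    root = ET.fromstring(kjb_xml)
    return [
        (entry.findtext("name", default=""), entry.findtext("type", default=""))
        for entry in root.findall("./entries/entry")
    ]


if __name__ == "__main__":
    for name, entry_type in list_entries(SAMPLE_KJB):
        print(f"{entry_type:8} {name}")
```

To try it on a real job, read the file's text and pass it to `list_entries`. A real .kjb file contains many more elements than this sample.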

Open a job

The method you use to open an existing job depends on whether you are using PDI locally on your machine or are connected to a repository. If you are connected to a repository, you are remotely accessing your file on the Pentaho Server. Optionally, you can open a job on a Virtual File System (VFS).

If you recently had the file open, you can also reopen it from the list of recently opened files.

On your local machine

Procedure

- In the PDI client, perform one of the following actions:

  - Select the open command from the menu bar.

  - Click the Open file icon in the toolbar.

  - Click the OPEN Files tile from the Welcome screen.

  - Press CTRL+O.

- Select the file from the Open window, then click Open.

  Note: By default, the folder from which the last file was accessed is opened.

In the Pentaho Repository

Procedure

- Verify that you are connected to a repository, which establishes remote access to the Pentaho Server.

- In the PDI client, perform one of the following actions to access the Open repository browser window:

  - Select the open command from the menu bar.

  - Click the Open file icon in the toolbar.

  - Press CTRL+O.

  Note: By default, the folder from which the last file was accessed is opened.

- To use a recently opened file, use the Recents option to navigate to your job.

- Use either the search box to find your job, or use the left pane to navigate to a repository folder containing your job.

  Note: If the PDI client is already connected to the Pentaho Repository, only the Recents and Pentaho Repository options appear in the left pane. If the PDI client is not connected to a Pentaho Repository, the following options appear in the left pane:

  - Recents

  - Local

  - VFS Connections

  - Hadoop Clusters

- If you are not connected to the Pentaho Repository, perform one of the following actions to access the job:

  - Double-click your job.

  - Select it and press the Enter key.

  - Select it and click Open.

- If you are connected to the Pentaho Repository, click Open to access the job.

On Virtual File Systems

You can open a PDI job on a Virtual File System (VFS) from the menu bar in the PDI client. See Connecting to Virtual File Systems for details.

Rename a folder

You can rename a folder or file from the Open window, but only if you are not connected to the Pentaho Repository.

Procedure

- Select a folder or file in the Open window.

- Right-click it.

- Select the Rename option from the context menu to rename it.

Save a job

The method you use to save a job depends on whether you are using PDI locally on your machine or are connected to a repository. If you are connected to a repository, you are remotely saving your file on the Pentaho Server. Optionally, you can save a job on a Virtual File System (VFS).

On your local machine

Procedure

- In the PDI client, perform one of the following actions:

  - Select the save or save-as command from the menu bar.

  - Click the Save current file icon in the toolbar.

  - Press CTRL+S.

  The Save As window appears.

- Specify the job's name in the window and select the location.

  By default, the folder from which the last file was accessed is opened.

  Note: The file types allowed are .ktr or .kjb.

- Click Save.

In the Pentaho Repository

Procedure

- Verify that you are connected to a repository, which establishes remote access to the Pentaho Server.

- In the PDI client, perform one of the following actions:

  - Select the save or save-as command from the menu bar.

  - Click the Save current file icon in the toolbar.

  - Press CTRL+S.

- Navigate to the repository folder where you want to save your job.

  By default, the folder from which the last file was accessed is opened.

- Specify the job's name in the File name field.

  Note: The file types allowed are .ktr or .kjb.

- Click Save.

On Virtual File Systems

You can save a PDI job on a Virtual File System (VFS) from the menu bar in the PDI client. See Connecting to Virtual File Systems for details.

Adjust job properties

You can adjust the parameters, logging options, settings, and transactions for jobs. To view the job properties, press CTRL+J or right-click the canvas and select Properties from the menu that appears.

Run your job

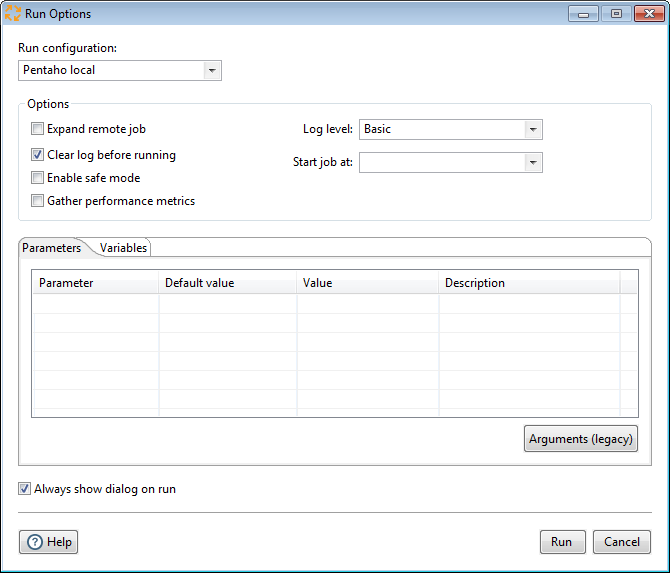

After creating a job to orchestrate your ETL activities (such as your transformations), you should run it in the PDI client to test how it performs in various scenarios. With the Run Options window, you can apply and adjust different run configurations, options, parameters, and variables. By defining multiple run configurations, you have a choice of running your job locally or on a server using the Pentaho engine.

When you are ready to run your job, you can perform any of the following actions to access the Run Options window:

- Click the Run icon on the toolbar.

- Select Run from the Action menu.

- Press F9.

The Run Options window appears.

In the Run Options window, you can specify a Run configuration to define whether the job runs locally, on the Pentaho Server, or on a slave (remote) server. To set up run configurations, see Run Configurations.

The Run Options window also lets you specify logging and other options, or experiment by passing temporary values for defined parameters and variables during each iterative run.

Always show dialog on run is selected by default. You can deselect this option if you want to use the same run options every time you execute your job. After you deselect Always show dialog on run, you can open the Run Options window again through the dropdown menu next to the Run icon in the toolbar, through the Action main menu, or by pressing F8.

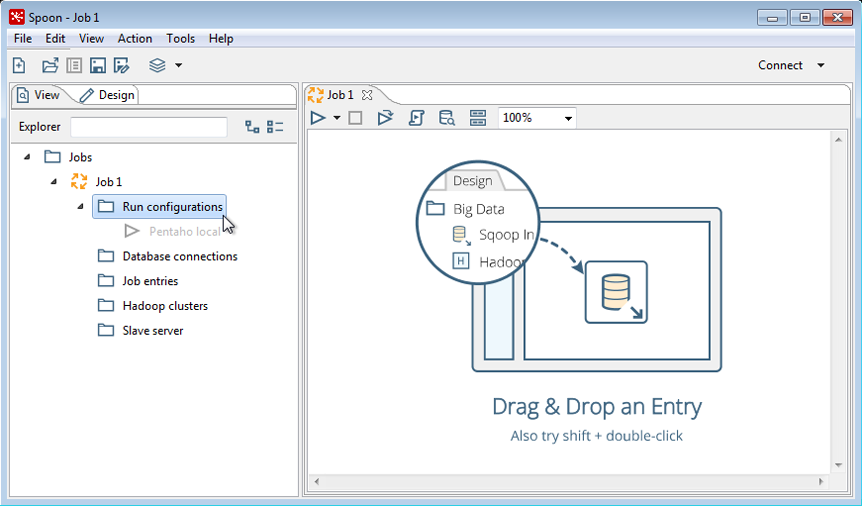

Run configurations

Some ETL activities are lightweight, such as loading a small text file to write out to a database or filtering a few rows to trim down your results. For these activities, you can run your job locally using the default Pentaho engine. Other ETL activities are more demanding, with many entries and steps that call other entries and steps or form a network of modules. For these activities, you can set up a separate Pentaho Server dedicated to running jobs and transformations using the Pentaho engine.

You can create or edit these configurations through Run configurations in the View tab.

To create a new run configuration, right-click on Run configurations and select New. To edit or delete a run configuration, right-click on an existing configuration.

Selecting New or Edit opens the Run configuration dialog box that contains the following fields:

| Field | Description |
| --- | --- |
| Name | Specify the name of the run configuration. |
| Description | Optionally, specify details of your configuration. |

Pentaho engine

The Pentaho engine does not execute sub-transformations or sub-jobs when you select the Pentaho server or Slave server option. If you want to run a sub-transformation on the same server where your parent job runs, select Local for the Run Configuration type.

The Settings section of the Run configuration dialog box contains the following options when Pentaho is selected as the Engine for running a job:

| Option | Description |
| --- | --- |
| Local | Select this option to use the Pentaho engine to run a job on your local machine. |
| Pentaho Server | Select this option to run your job on the Pentaho Server. This option only appears if you are connected to a Pentaho Repository. |
| Slave server | Select this option to send your job to a slave or remote server. |
| Location | If you select Slave server, specify the location of your remote server. |
| Send resources to the server | If you specified a Location for a server, select this option to send your job to the specified server before running it, so that the job runs locally on that server. Any related resources, such as other referenced files, are also included in the information sent to the server. |
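The dependencies between these settings can be summarized in a small model. The sketch below is illustrative only and is not how PDI stores run configurations: the `RunConfiguration` class, its field names, and the `check` helper are hypothetical, and the rules encode only what the tables above state.

```python
from dataclasses import dataclass
from typing import List, Optional

# The three choices offered in the Settings section.
TARGETS = ("Local", "Pentaho Server", "Slave server")


@dataclass
class RunConfiguration:
    """Hypothetical in-memory model of the Run configuration dialog box."""
    name: str
    target: str = "Local"
    description: str = ""
    location: Optional[str] = None   # only meaningful for "Slave server"
    send_resources: bool = False     # only meaningful when a location is set


def check(config: RunConfiguration, connected_to_repository: bool) -> List[str]:
    """Return a list of problems; an empty list means the settings agree."""
    problems = []
    if config.target not in TARGETS:
        problems.append(f"unknown target: {config.target}")
    if config.target == "Pentaho Server" and not connected_to_repository:
        problems.append("Pentaho Server requires a repository connection")
    if config.target == "Slave server" and not config.location:
        problems.append("Slave server requires a location")
    if config.send_resources and not config.location:
        problems.append("Send resources to the server requires a location")
    return problems


if __name__ == "__main__":
    remote = RunConfiguration("remote-run", target="Slave server")
    print(check(remote, connected_to_repository=False))
```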

Options

Errors, warnings, and other information generated as the job runs are stored in logs. Through the Options section of this window, you can specify how much information is logged and whether the log is cleared before each run. You can also enable safe mode and specify whether PDI should gather performance metrics. Logging and Performance Monitoring describes the logging methods available in PDI.

| Option | Description |
| --- | --- |
| Clear log before running | Indicates whether to clear all your logs before you run your job. If your log is large, you might need to clear it before the next execution to conserve space. |
| Log level | Specifies how much logging is performed and the amount of information captured. The available levels, from least to most verbose, are Nothing, Error, Minimal, Basic, Detailed, Debug, and Row Level. Debug and Row Level logging levels contain information you may consider too sensitive to be shown, so consider the sensitivity of your data when selecting these levels. Performance Monitoring and Logging describes how best to use these logging methods. |
| Enable safe mode | Checks every row passed through your job and ensures all layouts are identical. If a row does not have the same layout as the first row, an error is generated and reported. |
| Start job at | Specifies an alternative starting entry for your job. All the current entries in your job are listed as options in the dropdown menu. |
| Gather performance metrics | Monitors the performance of your job execution through these metrics. Using Performance Graphs shows how to visually analyze these metrics. |
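The safe mode check described in the table can be pictured as comparing each row's layout with that of the first row. The following is a conceptual sketch only, not PDI's implementation. It assumes a row is a dictionary and that a "layout" is the ordered list of field names and value types; `layout_of` and `check_layouts` are hypothetical names.

```python
from typing import Any, Dict, Iterable, Optional, Tuple

Layout = Tuple[Tuple[str, str], ...]


def layout_of(row: Dict[str, Any]) -> Layout:
    """Describe a row as an ordered tuple of (field name, type name)."""
    return tuple((name, type(value).__name__) for name, value in row.items())


def check_layouts(rows: Iterable[Dict[str, Any]]) -> int:
    """Raise ValueError at the first row whose layout differs from the
    first row's layout; otherwise return the number of rows checked."""
    expected: Optional[Layout] = None
    count = 0
    for index, row in enumerate(rows):
        layout = layout_of(row)
        if expected is None:
            expected = layout          # the first row defines the layout
        elif layout != expected:
            raise ValueError(
                f"row {index} has layout {layout}, expected {expected}"
            )
        count += 1
    return count


if __name__ == "__main__":
    rows = [{"id": 1, "name": "a"}, {"id": 2, "name": "b"}]
    print(check_layouts(rows))
```

Checking every row adds overhead, which is one reason a check like this is an option rather than always on.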

Parameters and variables

You can temporarily modify parameters and variables for each execution of your job to experimentally determine their best values. The values you enter into these tables are only used when you run the job from the Run Options window. The values you originally defined for these parameters and variables are not permanently changed by the values you specify in these tables.

| Value Type | Description |
| --- | --- |
| Parameters | Set parameter values related to your job during runtime. A parameter is a local variable. The parameters you define while creating your job are shown in the table under the Parameters tab. |
| Variables | Set values for user-defined and environment variables related to your job during runtime. |
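Outside the PDI client, a comparable set of choices (job file, log level, and temporary parameter values) can be passed on the command line to Kitchen, the PDI tool for running jobs. The sketch below only assembles such a command. It assumes the `-file=`, `-level=`, and `-param:NAME=value` option forms and the log level names shown, so check them against the Kitchen documentation for your PDI version. The script path, job path, and parameter names are hypothetical.

```python
from typing import Dict, List, Optional

# Log level names as assumed for the Kitchen -level option.
KITCHEN_LOG_LEVELS = (
    "Nothing", "Error", "Minimal", "Basic", "Detailed", "Debug", "Rowlevel",
)


def build_kitchen_command(
    job_file: str,
    log_level: str = "Basic",
    params: Optional[Dict[str, str]] = None,
    kitchen: str = "./kitchen.sh",   # hypothetical path to the Kitchen script
) -> List[str]:
    """Assemble (but do not run) a Kitchen command line for one job run."""
    if log_level not in KITCHEN_LOG_LEVELS:
        raise ValueError(f"unknown log level: {log_level}")
    command = [kitchen, f"-file={job_file}", f"-level={log_level}"]
    # Parameter values apply to this run only, like the Run Options tables.
    for name, value in (params or {}).items():
        command.append(f"-param:{name}={value}")
    return command


if __name__ == "__main__":
    cmd = build_kitchen_command(
        "/tmp/nightly_load.kjb", "Detailed", {"LOAD_DATE": "2024-01-31"}
    )
    print(" ".join(cmd))
```

The returned list can be handed to `subprocess.run(cmd, check=True)` from the PDI installation directory. Keeping the assembly separate from execution makes the command easy to inspect first.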

Stop your job

There are two methods you can use to stop jobs running in the PDI client. The method you use depends on the processing requirements of your ETL activity. Most jobs can be stopped immediately without concern. However, some jobs ingest records from messaging or streaming data, and such jobs may need to be stopped safely to avoid potential data loss.

To stop a job running in the PDI client:

- Use Stop if your ETL activity should stop processing all data immediately.

- Use Stop input processing if your ETL activity needs to finish any records already initiated or retrieved before stopping.
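The difference between the two options can be sketched with a toy batch loop. This is a conceptual illustration under stated assumptions, not how the PDI client implements either option: records are retrieved in batches, a stop request arrives once `stop_after` records have been processed, and the function name and parameters are hypothetical.

```python
from itertools import islice
from typing import Iterable, List


def run_until_stopped(
    source: Iterable[int], batch_size: int, stop_after: int, finish_retrieved: bool
) -> List[int]:
    """Process records in batches until a simulated stop request.

    finish_retrieved=False models Stop: processing ends immediately.
    finish_retrieved=True models Stop input processing: no new batch is
    read, but records already retrieved are still processed.
    """
    processed: List[int] = []
    iterator = iter(source)
    while True:
        batch = list(islice(iterator, batch_size))   # records retrieved
        if not batch:
            return processed                         # input exhausted
        for record in batch:
            stop_requested = len(processed) >= stop_after
            if stop_requested and not finish_retrieved:
                return processed                     # abandon rest of batch
            processed.append(record)
        if len(processed) >= stop_after:
            return processed                         # read no further input


if __name__ == "__main__":
    print(run_until_stopped(range(10), 4, 2, finish_retrieved=False))
    print(run_until_stopped(range(10), 4, 2, finish_retrieved=True))
```

In the first call, the records already retrieved in the current batch are dropped when the stop request arrives. In the second, they are processed before the run ends, which is the behavior you want when dropped records would mean data loss.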