Using Parquet Input on the Pentaho engine

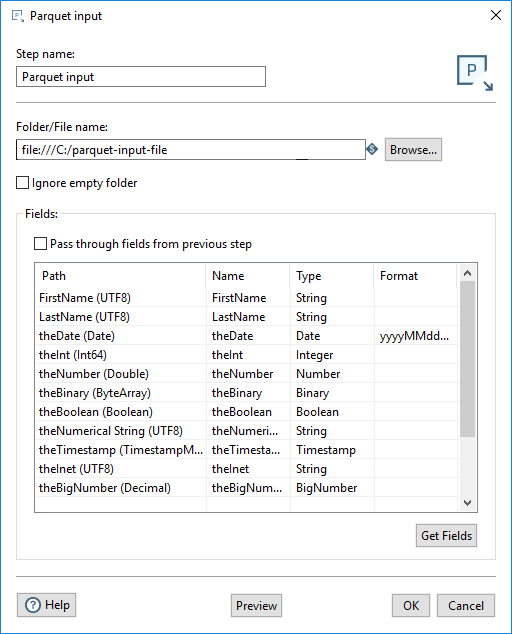

If you are running your transformation on the Pentaho engine, use the following instructions to set up the Parquet Input step.

General

The following fields are general to this transformation step:

| Field | Description |

| Step name | Specify the unique name of the Parquet input step on the canvas. You can customize the name or use the provided default. |

| Folder/File name | Specify the fully qualified URL of the source file or folder name for the input fields. Click Browse to display the Open File window and navigate to the file or folder. For the supported file system types, see Connecting to Virtual File Systems. The Pentaho engine reads a single Parquet file as an input. |

| Ignore empty folder | Select to allow the transformation to proceed when the specified source file is not found in the designated location. If not selected, the specified source file is required in the location for the transformation to proceed. |

Fields

The Fields section contains the following items:

- The Pass through fields from the previous step option reads the fields from the input file without redefining any of the fields.

- The table defines the data about the columns to read from the Parquet file.

The table in the Fields section defines the fields to read as input from the Parquet file, the associated PDI field name, and the data type of the field.

Enter the information for the Parquet input step fields, as shown in the following table:

| Field | Description |

| Path | Specify the name of the field as it will appear in the Parquet data file or files, and the Parquet data type. |

| Name | Specify the name of the input field. |

| Type | Specify the type of the input field. |

| Format | Specify the date format when the Type specified is Date. |

Provide a path to a Parquet data file and click Get Fields. When the fields are retrieved, the Parquet type is converted to an appropriate PDI type, as shown in the table below. You can preview the data in the Parquet file by clicking Preview. You can change the Type by using the Type drop-down or by entering the type manually.

PDI types

The Parquet to PDI data type values are as shown in the table below:

| Parquet Type | PDI Type |

| ByteArray | Binary |

| Boolean | Boolean |

| Double | Number |

| Float | Number |

| FixedLengthByteArray | Binary |

| Decimal | BigNumber |

| Date | Date |

| Enum | String |

| Int8 | Integer |

| Int16 | Integer |

| Int32 | Integer |

| Int64 | Integer |

| Int96 | Timestamp |

| UInt8 | Integer |

| UInt16 | Integer |

| UInt32 | Integer |

| UInt64 | Integer |

| UTF8 | String |

| TimeMillis | Timestamp |

| TimestampMillis | Timestamp |

Metadata injection support

All fields of this step support metadata injection. You can use this step with ETL metadata injection to pass metadata to your transformation at runtime.