Transformation (job entry)

The Transformation entry runs a previously-defined transformation within a job. This entry is the access point from your job to your ETL activity (transformation).

A common job might get files over FTP, check that a required target database table exists, run a transformation that populates that table, and email an error log if the transformation fails. In this example, the Transformation entry defines which transformation runs to populate the table.

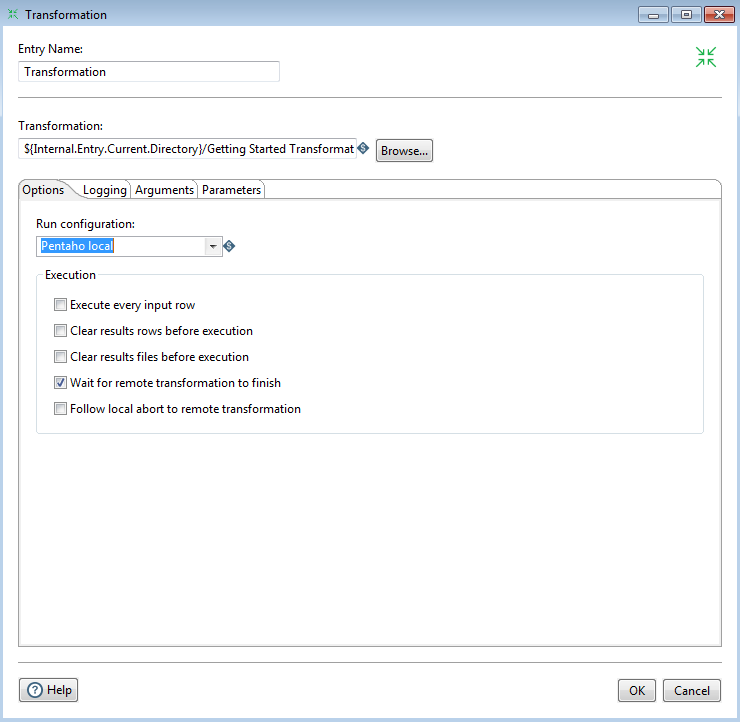

General

Enter the following information in the job entry fields:

| Field | Description |
|---|---|
| Entry Name | Specifies the unique name of the job entry on the canvas. A job entry can be placed on the canvas several times, yet it represents the same job entry. The Entry Name is set to Transformation by default. |
| Transformation | Specify your transformation by entering its path or clicking Browse. If you select a transformation that has the same root path as the current transformation, the variable ${Internal.Entry.Current.Directory} is automatically inserted in place of the common root path. For example, if the current transformation's path is /home/admin/transformation.ktr and you select the transformation /home/admin/path/sub.ktr, then the path is automatically converted to ${Internal.Entry.Current.Directory}/path/sub.ktr. If you are working with a repository, specify the name of the transformation in your repository. If you are not working with a repository, specify the XML file name of the transformation on your system. Transformations previously specified by reference are automatically converted to be specified by name within the Pentaho Repository. |
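The automatic path conversion described for the Transformation field can be illustrated with a short sketch. This is not PDI's own code. The function name `relativize` is hypothetical, and the sketch assumes POSIX-style paths and that "same root path" means the selected file lies under the directory of the current file.

```python
from pathlib import PurePosixPath

# Variable name taken from the text above.
VARIABLE = "${Internal.Entry.Current.Directory}"


def relativize(current_path: str, selected_path: str) -> str:
    """Replace the current file's directory with the variable when it
    is a prefix of the selected path (hypothetical helper)."""
    current_dir = PurePosixPath(current_path).parent
    selected = PurePosixPath(selected_path)
    try:
        remainder = selected.relative_to(current_dir)
    except ValueError:
        # No common root under the current directory: keep the path as entered.
        return selected_path
    return f"{VARIABLE}/{remainder.as_posix()}"


print(relativize("/home/admin/transformation.ktr", "/home/admin/path/sub.ktr"))
# prints ${Internal.Entry.Current.Directory}/path/sub.ktr
```

A path outside `/home/admin` is returned unchanged in this sketch, which is an assumption about the fallback rather than documented behavior.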

Options

The Transformation job entry features several tabs with fields. Each tab is described below.

Options tab

The following table describes available options:

| Option | Description |
|---|---|
| Run Configuration | The transformation can run in different types of environment configurations. Specify a run configuration to control whether the transformation runs on the native Pentaho engine or a Spark client. If you choose the Pentaho engine, you can run the transformation locally, on a remote server, or in a clustered environment. To set up run configurations, see Run configurations. |
| Execute every input row | Runs the transformation once for every input row (looping). |
| Clear results rows before execution | Makes sure the results rows are cleared before the transformation starts. |
| Clear results files before execution | Makes sure the results files are cleared before the transformation starts. |
| Wait for remote transformation to finish | If you selected Server as your environment type, choose this option to block the job until the transformation finishes running on the server. |
| Follow local abort to remote transformation | If you selected Server as your environment type, choose this option to send the local abort signal to the remote transformation. |

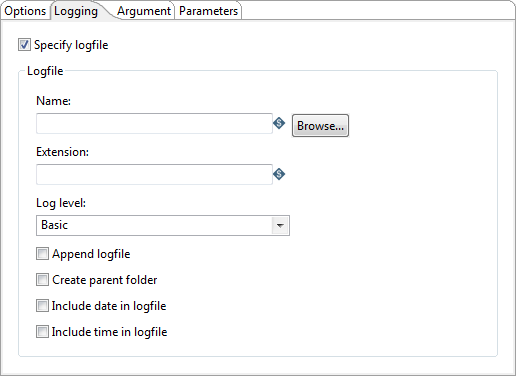

Logging tab

By default, if you do not set logging, PDI will take generated log entries and create a log record inside the job. For example, suppose a job has three transformations to run and you have not set logging. The transformations will not log information to other files, locations, or special configurations. In this instance, the job runs and logs information into its master job log.

In most instances, it is acceptable for logging information to be available in the job log. For example, if a job loads dimensions, you want the logs for those load runs to display in the job log. If there are errors in the transformations, they are also displayed in the job log. However, if you want all your log information kept in one place, you must set up logging.

The following table describes the options for setting up a log file:

| Option | Description |
|---|---|
| Specify logfile | Specifies a separate logging file for running this transformation. |
| Name | Specifies the directory and base name of the log file (C:\logs for example). |
| Extension | Specifies the file name extension (.log or .txt for example). |
| Log level | Specifies the logging level for running the transformation. See Logging and performance monitoring for more details. |
| Append logfile | Appends to the existing log file instead of creating a new one. |
| Create parent folder | Creates a parent folder for the log file if it does not exist. |
| Include date in logfile | Adds the system date to the log file name in the format YYYYMMDD (_20051231 for example). |
| Include time in logfile | Adds the system time to the log file name in the format HHMMSS (_235959 for example). |

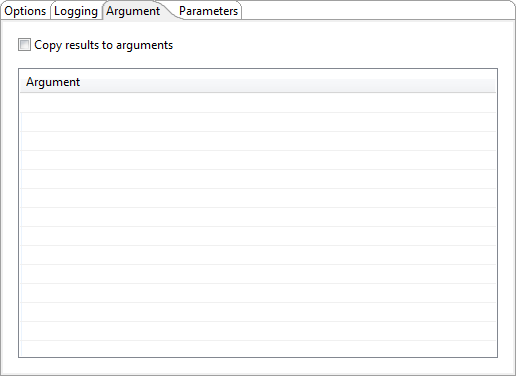
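To make the naming options concrete, the following sketch composes a log file name from the Name, Extension, Include date in logfile, and Include time in logfile settings. It is an illustration only. The function `log_file_name` and the sample path are hypothetical, and placing the date before the time and joining the extension with a dot are assumptions, not confirmed PDI behavior.

```python
from datetime import datetime
from typing import Optional


def log_file_name(name: str, extension: str,
                  include_date: bool = False,
                  include_time: bool = False,
                  now: Optional[datetime] = None) -> str:
    """Build a log file name from the Logging tab options (hypothetical helper)."""
    now = now or datetime.now()
    result = name
    if include_date:
        result += now.strftime("_%Y%m%d")   # YYYYMMDD, for example _20051231
    if include_time:
        result += now.strftime("_%H%M%S")   # HHMMSS, for example _235959
    if extension:
        # Accept the extension with or without a leading dot.
        result += "." + extension.lstrip(".")
    return result


stamp = datetime(2005, 12, 31, 23, 59, 59)
print(log_file_name("/var/log/pdi/load_dim", "log", True, True, stamp))
# prints /var/log/pdi/load_dim_20051231_235959.log
```

Passing a fixed `now` value keeps the example reproducible. Without it, the sketch uses the current system time, as the option descriptions suggest.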

Arguments tab

Enter the following information to pass arguments to the transformation:

| Option | Description |
|---|---|
| Copy results to arguments | Copies the results from a previous transformation as arguments of the transformation using the Copy rows to result step. If the Execute every input row option is selected, then each row is a set of command-line arguments to be passed into the transformation; otherwise, only the first row is used to generate the command-line arguments. |
| Argument | Specify which command-line arguments will be passed to the transformation. |

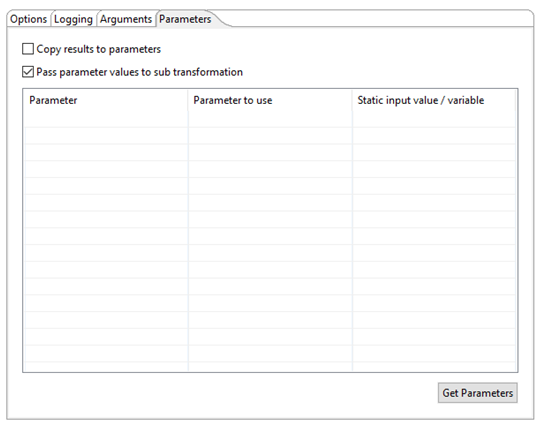
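The interaction between Copy results to arguments and Execute every input row can be modeled in a few lines. This is a minimal sketch of the rule as described, not PDI code. The function `argument_sets`, the sample rows, and the handling of an empty result set are all assumptions made for illustration.

```python
from typing import List, Sequence


def argument_sets(result_rows: Sequence[Sequence[str]],
                  execute_every_input_row: bool) -> List[List[str]]:
    """Return one argument list per transformation run (hypothetical helper)."""
    if not result_rows:
        # The text does not describe this case; the sketch yields no argument sets.
        return []
    if execute_every_input_row:
        # Looping: each result row becomes the arguments of one run.
        return [list(row) for row in result_rows]
    # Single run: only the first row supplies the arguments.
    return [list(result_rows[0])]


rows = [["2024-01-01", "north"], ["2024-01-02", "south"]]
print(argument_sets(rows, execute_every_input_row=True))
# prints [['2024-01-01', 'north'], ['2024-01-02', 'south']]
print(argument_sets(rows, execute_every_input_row=False))
# prints [['2024-01-01', 'north']]
```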

Parameters tab

Enter the following information to pass parameters to the transformation:

| Option | Description |
|---|---|
| Copy results to parameters | Copies the results from a previous transformation as parameters of the transformation using the Copy rows to result step. |
| Pass parameter values to sub transformation | Passes all parameters of the job down to the sub-transformation. |
| Parameter | Specify the parameter name passed to the transformation. |
| Parameter to use | Specify the field of an incoming record from a previous transformation as the parameter. If you enter a field in Parameter to use, then Static input value / variable is disabled. |
| Static input value / variable | Specify the value of the transformation parameter, either as a static value or as a variable. If you enter a value in Static input value / variable, then Parameter to use is disabled. |
| Get Parameters | Gets the existing parameters already associated with the transformation. |
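The mutually exclusive choice between Parameter to use and Static input value / variable can be sketched as follows. This is an illustrative model, not PDI's implementation. The helpers `substitute` and `resolve_parameters`, the sample parameter names, and the choice to leave unknown variables untouched are assumptions.

```python
import re
from typing import Dict, List, Optional, Tuple

# Each mapping is (parameter name, field to use, static value or variable).
Mapping = Tuple[str, Optional[str], Optional[str]]


def substitute(text: str, variables: Dict[str, str]) -> str:
    """Expand ${NAME} references; unknown names are left as written (assumption)."""
    return re.sub(r"\$\{([^}]+)\}",
                  lambda m: variables.get(m.group(1), m.group(0)),
                  text)


def resolve_parameters(mappings: List[Mapping],
                       row: Dict[str, str],
                       variables: Dict[str, str]) -> Dict[str, str]:
    """Resolve each parameter from a row field or a static value, never both."""
    resolved = {}
    for parameter, field, static_value in mappings:
        if field and static_value:
            raise ValueError(f"{parameter}: set a field or a static value, not both")
        if field:
            resolved[parameter] = row[field]          # value from the incoming record
        else:
            resolved[parameter] = substitute(static_value or "", variables)
    return resolved


mappings = [("REGION", "region", None),
            ("OUT_FILE", None, "${FILE_PREFIX}_sales.txt")]
print(resolve_parameters(mappings, {"region": "north"}, {"FILE_PREFIX": "daily"}))
# prints {'REGION': 'north', 'OUT_FILE': 'daily_sales.txt'}
```

Raising an error when both columns are filled mirrors the way the dialog disables one field once the other has a value.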