Optimize a Pentaho Data Service

As you Test a Pentaho Data Service, you might notice certain bottlenecks, or parts of the transformation that could run more efficiently. If you want to improve the performance of your data service, apply an optimization technique. Some techniques are specifically designed for Pentaho Data Services. See Pentaho Data Integration performance tips to learn about other general design and optimization techniques that can improve the performance of your transformation.

| Optimization Technique | When to Use |

| Service Cache |

For a regular data service only, adjust how long data results are cached. Consider using this technique if either of the following situations apply:

NoteThis optimization technique is not available for a streaming data service. It

will not appear as an optimization tab if Data Service Type

is set to Streaming.

|

| Query Pushdown |

Handle input step queries at the source. Consider using this technique if both of the following situations apply:

|

| Parameter Pushdown |

Handle step queries at the source. Consider using this technique if both of the following situations apply:

|

| Streaming Optimization |

For a streaming data service only, adjust the maximum number of rows and elapsed time to produce a new streaming window for processing. Consider using this technique if you are creating a data service from one of the following streaming data steps:

NoteThis optimization technique is not available for a regular data service. It will

not appear as an optimization tab if Data Service Type is set

to Regular.

|

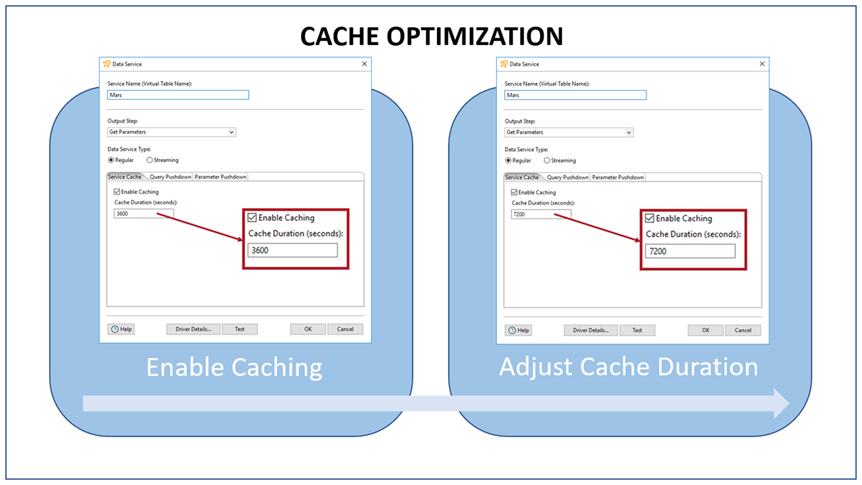

Apply the service cache optimization

This optimization technique stores the Pentaho Data Service in a cache.

By default, caching is enabled and the results are stored for an hour, but you can adjust the cache so that the results of the data service are stored for one second or longer. Caching is a great technique to apply if you have moderately-sized data result sets, and you anticipate follow-on (subsequent) queries.

How the service cache optimization technique works

If you run the data service during the time that the data service results are cached, PDI will run your query against the cached data set instead of running the entire transformation again only if certain conditions are met. These conditions are determined by the other optimization techniques you choose to apply as well as whether the query results are a subset of the cached data set.

Enabling the cache tells PDI to store the results of the data service transformation for the length of time you specify.

If you do not combine the Service Cache optimization with other optimization techniques and

if you query the data service more than once before the cache expires, the query will run

against the cached data set if the cached data set contains all of the matching records in

the original data set. For example, if the initial run of the data service populates the

cache with the results of SELECT * FROM 'employee', a subsequent data

service call that retrieves a subset of the data (e.g. SELECT * FROM 'employee'

WHERE region = "South") will be run against the cached data set. For this to

happen, the original query must have been run against the full data set.

When you run a test in the Test Data Service

window, then adjust the query and run the test again, the query is not run against the

cache. This is because when you run a test, the results only return a certain number of

records (100, 500, or 1000, depending on what you selected for Max

Rows). For example, you could run a test that uses the SELECT * FROM

'employee' query to return the first 100 records from a 5000 record table. The

initial test query would return the first 100 records from the employee table.

Assume that the cached result set consists of records for 50 males and 50

females. If you run a second test, but adjust the query so that you only show records for

females (SELECT * FROM 'employee' WHERE gender="F") running the query

against the cached results would result in only 50 records being returned instead of 100. To

avoid this and to provide more accurate results, PDI runs the transformation

again so that the second test query is run against the table and returns the first 100

matching results.

Adjust the cache duration

Procedure

Click the Service Cache tab in the Data Service window.

Select Enable Caching to ensure the cache is accessible.

Modify the Cache Duration (seconds) field.

Click OK.

Disable the cache

Procedure

Click the Service Cache tab in the Data Service window.

Clear Enable Caching to disable the cache.

Click OK.

Clear the cache

Procedure

Click the Service Cache tab in the Data Service window.

Clear Enable Caching to disable the cache.

Click OK to close the window.

Open the Data Service window again, and click the Service Cache tab.

Select Enable Caching to re-enable the cache.

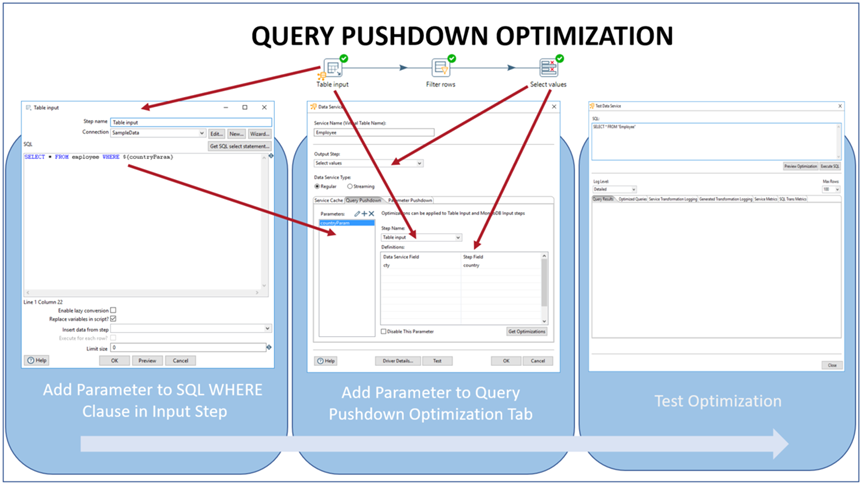

Apply a query pushdown optimization

Use the Query Pushdown optimization technique to

translate the WHERE clause in a SQL query run against a data service to a

corresponding WHERE clause in the Table

Input or MongoDB Input steps. Queries in these

input steps are then filtered down and more efficiently handled at the data source.

How the query pushdown optimization technique works

To apply Query Pushdown optimization, first set the input step optimization values, then add the optimization parameter to the input step query.

The optimization requires the creation of a parameter that takes the place of

the WHERE clause, like the following example: SELECT * FROM

'employee' WHERE ${countryParam}.

If you combine this optimization technique with Service Cache optimization and if you query the data service more than once before the cache expires, the query will run against the cached data set if the cached data set contains all of the matching records in the original data set. Also, when you run a test in the Test Data Service window, then adjust the query and run the test again, the query is not run against the cache. When you run the test, the results only return a certain number of records (100, 500, or 1000, depending on what you selected for Max Rows). For more information on the optimization technique, see the Apply a Service Cache Optimization section.

Add the query pushdown parameter to the Table Input or MongoDB Input steps

Procedure

Create a transformation that has a Table Input or MongoDB Input step.

Run your transformation to ensure it executes properly.

Double-click the input step (Table Input or MongoDB Input) that contains the query you want to optimize.

Add a parameter to the location of the WHERE clause value, like this:

- SQL Query Example:

SELECT * FROM media WHERE ${countryParam} - MongoDB Query Example:

{$match : ${mongoDbParam}}

- SQL Query Example:

Press CTRLSPACE to view the list of parameters. Click on a parameter from the list to add it to the query.

Select the Replace Variables in Script? checkbox.

Click the OK button.

Set up query pushdown parameter optimization

Procedure

Open the Data Service window, then click the Query Pushdown tab.

Click the Plus Sign button near the Parameters label.

In the Create Parameter window add the name of an optimization parameter you created in the input step’s SQL query.

Click OK.

Select the step that contains the parameter from Step Name.

In the Definitions area of the window, enter the Data Service Field and the Step Field you want to parameterize.

- The Data Service Field contains the transformation's name of the field you want to parameterize. The name should be as it appears in the transformation's output field. For example, you might have renamed the cty field from your data source to country. You would enter country in the Data Service Field.

- The Step Field should contain the data source's name of the field you want to parameterize. For example, if you wanted to parameterize the cty field in a MySQL database, enter cty in the Step Field.

Optionally, you can click the Get Optimizations button to automatically generate input step optimizations based on the output fields for the step on which you have created the data service and input fields.

Click the OK button to save and close the window.

Test the optimization.

Publish the Pentaho Data Service.

Disable the query pushdown optimization

To disable this optimization, select Disable an Optimization in the Data Services window.

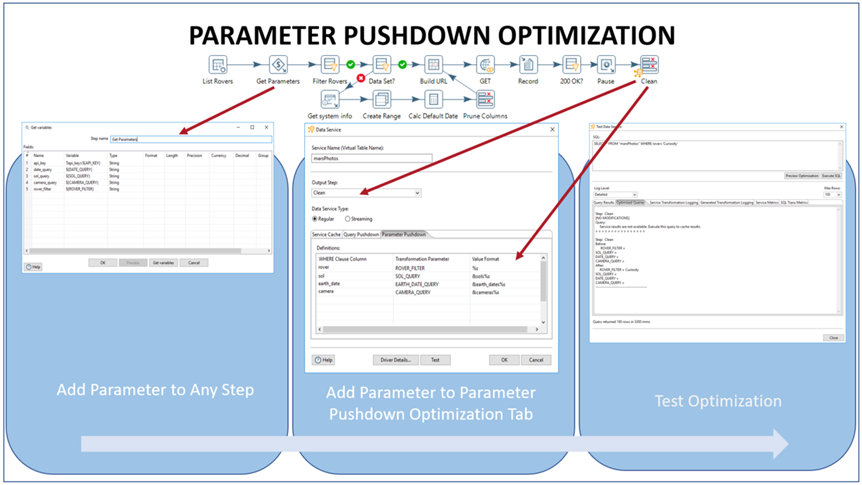

Apply a parameter pushdown optimization

The Parameter Pushdown optimization technique can be applied to any step in the transformation.

Although similar to the Query Pushdown

optimization, Parameter Pushdown optimization differs because it can applied to any step and

it maps a field value to a parameter in a simple WHERE clause in which a

parameter is assigned a specific value using the equal operator like the following example:

WHERE region= "South".

How the parameter pushdown optimization technique works

To set up the parameter pushdown optimization, first set up the optimization, then add the parameter to the transformation step.

If you combine this optimization technique with Service Cache optimization and if you query the data service more than once before the cache expires, the query will run against the cached data set if the cached data set contains all of the matching records in the original data set. Also, when you run a test in in the Test Data Service window, then adjust the query and run the test again, the query is not run against the cache. When you run the test, the results only return a certain number of records (100, 500, or 1000, depending on what you selected for Max Rows). For more information on the optimization technique, see the Apply a Service Cache Optimization section.

Add the parameter pushdown parameter to the step

Procedure

Run your transformation to ensure it executes properly.

Add the parameter you want to optimize to one of the steps. Typically, you would want to specify the parameter in a JSON or REST Client step. If you use a filtered step, you would need to use the Get Variables step before the filtered step.

Click the OK button.

Set up parameter pushdown optimization

Procedure

Open the Data Service window, then click the Parameter Pushdown tab.

In the WHERE Clause Column, click in a cell and enter the Data Service Field name from the existing fields listed. The Data Service Field name is the virtual table field name in your

WHEREclause. Press ENTER. Possible values are defaulted for Transformation Parameter and Value Format.Adjust the name of the Transformation Parameter as needed. The name is something that you create; it must be unique in the data service. Type the name in the Transformation Parameter field or in the window transformation properties. (The parameter is created in the transformation properties or is something you specify in a field).

If needed, add a prefix or suffix to the default

%sin Value Format field. For example, if you want to format a value, consider using[value]=%s. In most cases, the default%sis sufficient formatting.Save the optimization and exit the window by clicking OK.

Apply streaming optimization

This optimization technique limits the batch size used for processing.

The records of the streaming Pentaho Data Service are partitioned into windows (batches) for processing. How the records are batched depends on the window mode you choose. A window can be time-based or row-based. A time-based window is created within a specified interval of time. A row-based window is created per a specified number of rows collected for processing.

How the streaming optimization technique works

You can customize the amount of the records used for processing by specifying a maximum limit for time or rows in the window mode. You can optimize data service processing by specifying a Rows Limit (the maximum number of rows that a window can have). If the value in the Rows Limit is reached, a new window is created. You can also optimize data service processing by specifying a Time Limit (the maximum elapsed time to create a new window).

Adjust the row or time limits

Procedure

Click the Streaming tab in the Data Service window.

Modify either the Rows Limit field or the Time Limit field.

Click OK.