Group By

This step groups rows from a source, based on a specified field or collection of fields. A new row is generated for each group. It can also generate one or more aggregate values for the groups. Common uses are calculating the average sales per product and counting the number of an item you have in stock.

The Group By step is designed for sorted inputs. If your input is not sorted, only double consecutive rows are grouped correctly. If you sort the data outside of PDI, the case sensitivity of the data in the fields may produce unexpected grouping results.

You can use the Memory Group By step to handle non-sorted input.

General

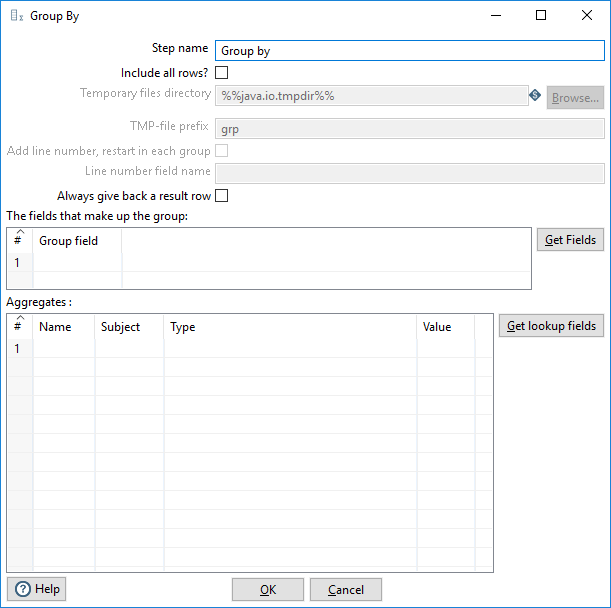

Enter the information in the options as shown in the following table:

| Option | Description |

| Step name | Specifies the unique name of the Group By step on the canvas. You can customize the name or leave it as the default. |

| Include all rows? |

Select this check box if you want to include all rows in the output. Clear this check box if you only want to output the aggregate rows. The following options are not available unless the Include all rows option is selected:

|

| Temporary files directory | Specify the directory where the temporary files are stored. The default is the standard temporary directory for the system. You must specify a directory when the Include all rows option is selected and the number of grouped rows exceeds 5000 rows. |

| TMP-file prefix | Specifies the file prefix for naming temporary files. |

| Add line number, restart in each group | Adds a line number that restarts at 1 in each group. When both Include all rows and this option are selected, all rows are included in the output with a line number for each row. |

| Line number field name | Specifies the name of the field where you want to add line numbers for each new group. |

| Always give back a result row | Select this check box to return a result row, even when there is no input row. When there are no input rows, this option returns a count of zero (0). Clear this check box if you only want to output a result row when there is an input row. |

The fields that make up the group table

Use The fields that make up the group table to specify the fields you want to group. Click Get Fields to add all fields from the input stream(s) to the table. Right-click a row in the table to edit that row or all rows in the table.

Aggregates table

The Aggregates table specifies the group you want to aggregate, the aggregation method, and the name of the resulting new field.

The Aggregates table contains the following columns:

| Column | Description |

| Name | The name of the aggregate field. |

| Subject | The subject on which you want to use an aggregation method. |

| Type |

The aggregate method. The aggregation methods are:

|

| Value | The aggregate value. |

Use Group By with Spark

The following differences occur when the Group By step is used with Spark:

- The field names cannot contain spaces, dashes, or special characters, and must start with a letter.

- The rows are sorted by the grouping fields.

- The Sort step before the Group By step is optional. Existing transformations that contain a Sort step before the Group By step will successfully run.

- The Group By and the Memory Group By steps work the same.

- If you select the Include All Rows option, you must also specify which fields to group in The fields that make up the group table.

- If you select the Include All Rows option, you cannot use the Number of distinct values aggregate type.

- The Temporary files directory and TMP-file prefix options do not apply. Temporary files are not created when run with Spark.

Examples

Examples included in the design-tools\data-integration\samples\transformations directory are:

- Calculate median and percentiles using the group by steps.ktr

- General - Repeat fields - Group by - Denormalize.ktr

- Group By - Calculate standard deviation.ktr

- Group By - include all rows without a grouping.ktr

- Group by - include all rows and calculations .ktr.

Metadata Injection Support

All fields of this step support metadata injection. You can use this step with ETL metadata injection to pass metadata to your transformation at runtime.

The metadata injection values for the aggregation type are:

- SUM

- AVERAGE

- MEDIAN

- PERCENTILE

- MIN

- MAX

- COUNT_ALL

- CONCAT_COMMA

- FIRST

- LAST

- FIRST_INCL_NULL

- LAST_INCL_NULL

- CUM_SUM

- CUM_AVG

- STD_DEV

- CONCAT_STRING

- COUNT_DISTINCT

- COUNT_ANY