Apache Atlas integration

Lumada Data Catalog provides an integration package to share metadata with Apache® Atlas™. For a given resource in Data Catalog, this integration allows users to:

- Push tags to Atlas

- Pull lineage information from Atlas

Configure Atlas integration

Procedure

Download the adapter package.

The package is available through the Hitachi Vantara Lumada and Pentaho Support Portal at https://support.pentaho.com/hc/en-us for supported customers. If you are not a supported customer, contact your Hitachi Vantara sales representative.

As the Data Catalog service user, navigate to where you want to extract the adapter.

For example, you can extract it in the Data Catalog Agent installation location.

$ su ldcuser

$ cd <LDC Agent Dir>

$ tar xvf <path to downloaded package>

A new folder is created under <LDC Agent Dir>/adapters/atlas.

Modify the configuration properties in atlas-application.properties to match your environment.

<LDC Agent Dir>$ vi adapters/atlas/conf/atlas-application.properties

Modify the following properties:

| Property | Description | Example Value |
|---|---|---|
| atlas.rest.address | URL including the host and port for the Atlas service. | http://127.0.0.1:21000 |
| atlas.user | Atlas user with the appropriate permissions to perform the import and export operations. | admin |
| atlas.password | Password corresponding to atlas.user. | admin |
| atlas.cluster.name | Name of the cluster where Atlas resides. | CL1 |

Note: The atlas.password value needs to be in clear text. Data Catalog stores it encrypted and decrypts it when connecting to Atlas.

Create an external source using the Data Catalog execution script found under the agent component.

<AGENT-HOME>$ bin/ldc createExternalSource -name <Atlas external source> -type Atlas -configFile adapters/atlas/conf/atlas-application.properties

- To delete the external source, use the Data Catalog execution script:

<AGENT-HOME>$ bin/ldc deleteExternalSource -name <Atlas external source> -type Atlas -configFile adapters/atlas/conf/atlas-application.properties


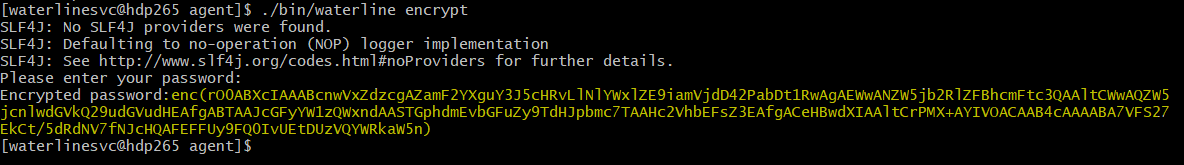
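Because the external source is created from atlas-application.properties, it can help to confirm that the four properties listed above are present before running createExternalSource. The following is a minimal sketch, not part of the adapter package: the function name check_atlas_props is hypothetical, and it assumes each property is written as key=value on its own line.

```shell
#!/bin/sh
# Hypothetical helper (not shipped with the adapter): report any of the four
# Atlas properties that are missing or empty in a properties file.
# Assumption: entries use the "key=value" form, one per line.
check_atlas_props() {
    props_file=$1
    missing=0
    for key in atlas.rest.address atlas.user atlas.password atlas.cluster.name; do
        # Escape the dots so they match literally in the regular expression.
        escaped=$(printf '%s' "$key" | sed 's/\./\\./g')
        pattern="^[[:space:]]*${escaped}[[:space:]]*=[[:space:]]*[^[:space:]]"
        if ! grep -Eq "$pattern" "$props_file"; then
            echo "missing or empty: $key" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Example call (path taken from the step above):
#   check_atlas_props adapters/atlas/conf/atlas-application.properties
```

The function returns a non-zero status when any property is missing, so it can guard the createExternalSource call in a script.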

Encrypt the password for the Data Catalog user using the following command:

<AGENT-HOME>$ bin/ldc-util encrypt

At the Please enter your password prompt, enter your password.

The screen displays the encrypted version of the password. Save this encrypted password for use in the next steps.

Sample output may look like the following:

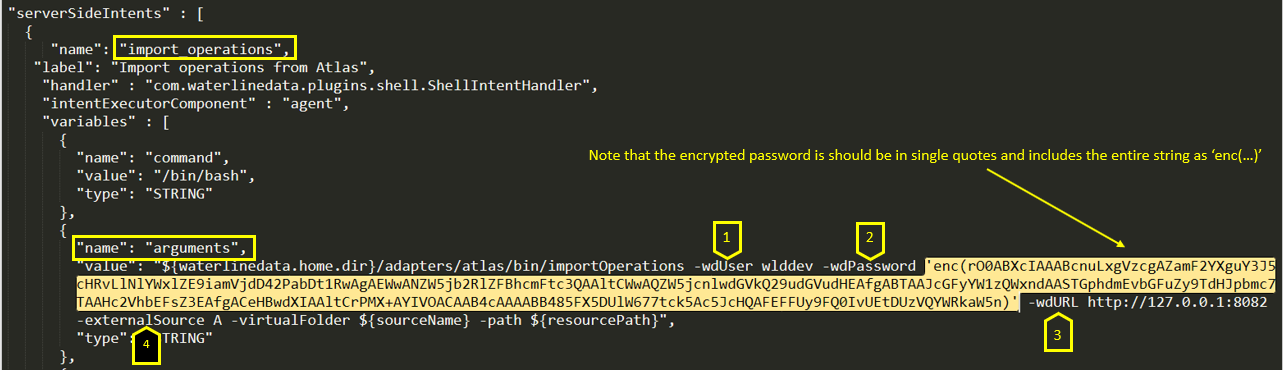

Note: Be sure to use the entire encrypted password string, including enc(...).

Copy the descriptor file <AGENT-HOME>/adapters/atlas/resources/atlas-plugin-descriptor.json to the <App-Server Dir>/ext directory and update the following in the atlas-plugin-descriptor.json file.

Import configuration

Under import_operations → arguments:

- -ldcUser is the Data Catalog service user created during installation.

- -ldcPassword is the encrypted password obtained in the encryption step above.

- -ldcURL is the Apache instance URL.

- -externalSource is the external source created earlier with createExternalSource.

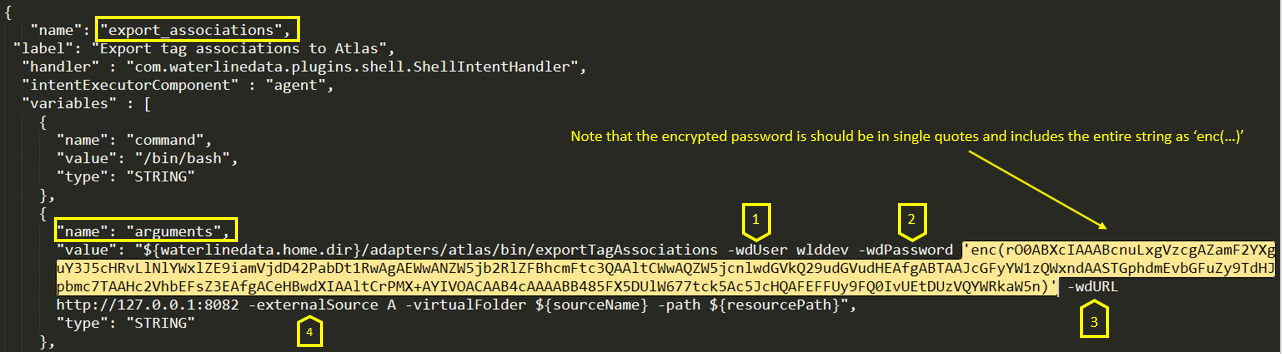

Export configuration

Under export_associations → arguments:

- -ldcUser is waterlinesvc (the Data Catalog service user created during installation).

- -ldcPassword is the encrypted password obtained in the encryption step above.

- -ldcURL is the Apache instance URL.

- -externalSource is the external source created earlier with createExternalSource.

Update the following new properties in the atlas-plugin-descriptor.json file as required.

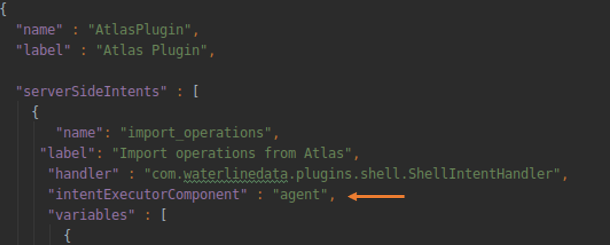

- intentExecutorComponent: Determines which component executes the plugin.

- agent.timeout: The time, in seconds, to wait for the UI-side response.

When intentExecutorComponent is set to Agent, you can provide dataSourceIds and virtualFolderIds to enable this functionality in the UI for a specific dataSource and virtualFolder.

- dataSourceIds: A String array that expects dataSource key(s).

- virtualFolderIds: A String array that expects virtualFolder key(s).

Restart the application server using the following command:

<LDC App-Server Dir>$ bin/app-server restart

Results

When there is any change in the user credentials or cluster information in the atlas-application.properties file, you must create a new external source to reflect the permission levels for the new user.
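One way to notice that the properties file has changed since the external source was created is to keep a checksum next to it. This is only a sketch under stated assumptions: the function names and the .cksum stamp file are hypothetical and not an adapter feature, and the check flags any edit to the file, not only credential or cluster changes.

```shell
#!/bin/sh
# Hypothetical helpers: remember the checksum of the properties file at the
# time the external source is created, and compare against it later.
record_props_checksum() {
    # Store "checksum size" for the file in a sibling .cksum stamp file.
    cksum < "$1" > "$1.cksum"
}

props_changed() {
    # Succeeds (status 0) when no stamp exists or the file content differs.
    [ -f "$1.cksum" ] || return 0
    [ "$(cksum < "$1")" != "$(cat "$1.cksum")" ]
}

# Example flow:
#   record_props_checksum adapters/atlas/conf/atlas-application.properties
#   ... later ...
#   if props_changed adapters/atlas/conf/atlas-application.properties; then
#       echo "Properties changed: create a new external source."
#   fi
```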

Importing Atlas lineage

You can import Atlas lineage programmatically using a command on the Data Catalog node.

Use the following command to import Atlas lineage for a Hive table (on one line):

<AGENT-HOME>$ adapters/atlas/bin/importOperations -externalSource <Atlas external source>

-wdURL http://<ldc host>:8082

-wdUser <ldc user>

-ldcPassword <password>

[-virtualFolder <Hive source name>

[-path /<database>.<tablename>]]

For a lineage relationship to be imported, the parent and child tables must exist in the Data Catalog.

If the data source and table names are not specified, lineage is imported for all the tables in the Data Catalog.

This can take a long time depending on the number of tables in the repository.

If the table name is provided, the data source name is required. If there is a data source name, and no table name, lineage for all the tables in the data source is imported.
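The argument rules above can be sketched as a small dry-run wrapper that refuses a table path without a data source and otherwise prints the importOperations command on one line. The function name build_atlas_import_cmd and its positional arguments are hypothetical; only the flags shown in the command above are used, and nothing is executed.

```shell
#!/bin/sh
# Hypothetical dry-run wrapper: prints the importOperations command line.
# Arguments: external source, LDC URL, LDC user, encrypted password,
#            optional data source name, optional /<database>.<tablename> path.
build_atlas_import_cmd() {
    ext_source=$1
    ldc_url=$2
    ldc_user=$3
    enc_password=$4
    virtual_folder=${5:-}
    table_path=${6:-}

    # A table path is only valid together with a data source name.
    if [ -n "$table_path" ] && [ -z "$virtual_folder" ]; then
        echo "error: -path requires -virtualFolder" >&2
        return 1
    fi

    # The password is single-quoted because enc(...) contains parentheses.
    cmd="adapters/atlas/bin/importOperations -externalSource $ext_source -wdURL $ldc_url -wdUser $ldc_user -ldcPassword '$enc_password'"
    if [ -n "$virtual_folder" ]; then
        cmd="$cmd -virtualFolder $virtual_folder"
    fi
    if [ -n "$table_path" ]; then
        cmd="$cmd -path $table_path"
    fi
    echo "$cmd"
}
```

Printing the command rather than running it makes it easy to review the scope first, since an unscoped import covers every table in Data Catalog.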

The password passed to the command with -ldcPassword must be encrypted with the following command:

<AGENT-HOME>$ bin/ldc encrypt

Exporting Lumada Data Catalog tags to Atlas

To export Data Catalog tag associations to Atlas, use the following command on the Data Catalog node.

<AGENT-HOME>$ adapters/atlas/bin/exportTagAssociations -externalSource <Atlas external source>

-wdURL http://<ldc host>:8082

-wdUser <ldc user>

-ldcPassword <password>

[-virtualFolder <Hive source name>

[-path /<database>.<tablename>]]

If the data source and table names are not specified, tag associations are exported for all the tables in the Data Catalog repository.

This can take a long time depending on the number of tables in the repository.

If the table name is provided, the data source name is required. If there is a data source name and no table name, tag associations for all the tables in the data source are exported.

The password passed to the command with -ldcPassword must be encrypted with the following command:

<AGENT-HOME>$ bin/ldc encrypt

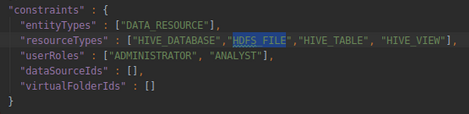

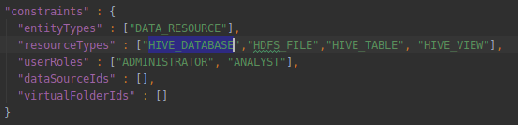

Exporting Lumada Data Catalog HDFS_PATH tags to Atlas

To export Data Catalog HDFS_PATH tags to Atlas from the Data Catalog UI, add HDFS_FILE to resourceTypes for export_associations in the atlas-plugin-descriptor.json file placed at /app-server/ext/. This allows the Data Catalog UI to show the appropriate action.

Use the following command to export HDFS_PATH tags from the command line:

<AGENT-HOME>$ adapters/atlas/bin/exportTagAssociations -externalSource <Atlas external source>

-wdURL http://<ldc host>:8082

-wdUser <ldc user>

-ldcPassword <password>

[-virtualFolder <HDFS source name>

[-path /<HDFS Resource path>]]

Exporting Lumada Data Catalog HIVE DB level tags to Atlas

To export Data Catalog HIVE database level tags to Atlas from the Data Catalog UI, add HIVE_DATABASE to resourceTypes for export_associations in the atlas-plugin-descriptor.json file placed at /app-server/ext/. This allows the Data Catalog UI to show the appropriate action.

Use the following command to export HIVE DB level tags from the command line:

<AGENT-HOME>$ adapters/atlas/bin/exportTagAssociations -externalSource <Atlas external source>

-wdURL http://<ldc host>:8082

-wdUser <ldc user>

-ldcPassword <password>

[-virtualFolder <Hive source name>

[-path /<database>]]
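When several Hive databases need their tags exported, one command per database keeps each run scoped. The sketch below only prints the commands; the function name print_db_export_cmds is hypothetical, and the angle-bracket placeholders from the command above are left unfilled on purpose.

```shell
#!/bin/sh
# Hypothetical helper: print one exportTagAssociations command per database.
# Arguments: external source name, Hive source name, then database names.
# Nothing is executed; replace the <...> placeholders before running a line.
print_db_export_cmds() {
    ext_source=$1
    hive_source=$2
    shift 2
    for db in "$@"; do
        printf '%s\n' "adapters/atlas/bin/exportTagAssociations -externalSource $ext_source -wdURL http://<ldc host>:8082 -wdUser <ldc user> -ldcPassword <password> -virtualFolder $hive_source -path /$db"
    done
}

# Example call:
#   print_db_export_cmds Atlas1 hive1 sales hr
```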